Continuous testing is a process that empowers teams to build quality into software development and accelerate the delivery of high-quality customer experiences. With continuous testing, teams get instant feedback on code health using automated testing.

Continuous testing allows organizations to assess business risk. Recent industry surveys show the top metrics used to track project progress and success:

- High test coverage

- Increased defect remediation

- Decreased defects in production

Building Quality Into the Development Process

Quality is an important topic for senior levels of management. Here are some findings from a poll of business leaders:

- 48% said quality improvement was in their top three initiatives.

- 68% were seeking ways to improve delivery speed and quality.

The new goal? Build quality into the development process to accelerate the delivery of high-quality customer experiences.

How is this combination of speed and quality achieved? Continuous testing. But it does come with its challenges.

Overcoming Continuous Testing Obstacles

With quality at speed as the goal, there are typically three primary obstacles to overcome when implementing continuous testing.

- Lack of expertise. The team may lack the availability to take on new approaches, or the skills needed to learn and adopt new tools and techniques.

- Unstable execution. Test automation as it currently stands in the organization may be unstable and unreliable. As code grows, so does execution time.

- Unavailable environment. The test environment is often unavailable, uncontrollable, and constrained by system dependencies.

Teams must remove these obstacles for continuous testing to become ingrained in the development culture of a software organization.

Obstacle 1: Lack of Expertise on the Team

The lack of expertise isn’t only a matter of the team’s knowledge and skills. It also reflects the limitations of the tools being used.

Consider user interface (UI) test automation. It’s a common practice, but reliable and reusable automation is difficult to achieve. Selenium is the de facto standard. Although it is open source and free, it has its own adoption curve, and it takes experience and time to master.

Selenium tests can be unreliable: what’s recorded one day can’t always be played back the next. Test maintenance becomes a growing issue as more UI tests are automated. Selenium requires further tool support to become easier to use and maintain.

Service-level, or API, testing is a relatively new but valuable practice. However, it sits in a no man’s land between developers and testers. Developers understand the APIs best but are neither motivated nor compelled to test them, and testers lack the knowledge needed to do API testing.

The number one challenge when organizations try to adopt API testing is understanding how the APIs are actually used. This isn’t to say APIs aren’t documented or designed well. Rather, there isn’t much information about how the services are used together in a use case, workflow, or scenario.

In addition, it’s important that API test automation goes beyond record (during operation) and playback (for testing). Modeling the behavior of the APIs and the interactions between them is needed, as is using these interactions to steer test creation and management.

Performance testing is often seen as something that’s done by another team in the organization, perhaps performed as a check box item. But when performance issues arise, product development may have moved forward, and a disruptive rollback may be required.

Ideally, performance testing is done earlier in the software development process and leverages the work already being done with automated functional testing. As the team adopts API testing, it can leverage that work to shift performance testing left, making it a joint responsibility of developers and testers.

How to Remove: Simplify Test Automation

The lack of expertise and training in the development and test team should not reflect on the team itself, but rather on the complexity of adopting test automation and its associated tools. There are solutions available that strive to simplify test automation. These solutions make adoption less disruptive and integrate better into existing processes.

Create reusable, maintainable, and understandable test scripts. Parasoft Selenic addresses the main issues with Selenium adoption: test creation and maintenance. It records UI interactions in the Chrome browser and automatically creates Selenium test cases from them. In addition, locators are recorded using the page object model so that they are more resilient to changes in the UI. Selenic uses AI-driven self-healing of tests so that when UI changes break existing tests, the tool makes intelligent assumptions to prevent the test cases from failing.

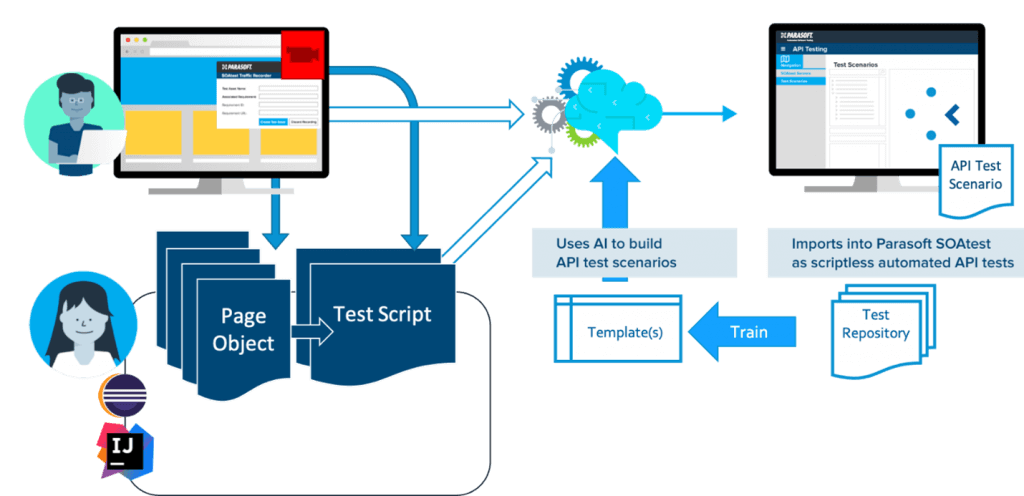
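The two ideas above, page objects and self-healing locators, can be sketched in a few lines. This is a minimal illustration only, not how Selenic works internally: `FakeDriver`, `LoginPage`, and the locator strings are hypothetical stand-ins so the example runs without a browser, and the "healing" here is just an ordered list of fallback locators.

```python
from dataclasses import dataclass, field


class ElementNotFound(Exception):
    """Raised when a locator (or every candidate locator) fails to match."""


class FakeDriver:
    """Hypothetical stand-in for a browser driver: maps locator strings to element text."""

    def __init__(self, dom):
        self.dom = dict(dom)

    def find(self, locator):
        if locator not in self.dom:
            raise ElementNotFound(locator)
        return self.dom[locator]


@dataclass
class LoginPage:
    """Page object: tests call methods here and never touch locators directly."""

    driver: FakeDriver
    # Ordered candidates: preferred locator first, fallbacks after it.
    submit_locators: tuple = ("id=submit", "css=button[type=submit]", "text=Sign in")
    healed: list = field(default_factory=list)

    def submit_button(self):
        for locator in self.submit_locators:
            try:
                element = self.driver.find(locator)
            except ElementNotFound:
                continue
            if locator != self.submit_locators[0]:
                # Record the substitution so a person can review and fix the test.
                self.healed.append((self.submit_locators[0], locator))
            return element
        raise ElementNotFound(f"no locator matched: {self.submit_locators}")
```

If the UI drops the `id=submit` attribute, `LoginPage(FakeDriver({"css=button[type=submit]": "Sign in"})).submit_button()` still finds the button, and `healed` holds the pair of old and new locators as a recommendation for the developer. Because the locators live in one page object, the eventual fix is a one-line change.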

Model real-world API test scenarios by recording manual and automated UI interactions. API test adoption is hindered by the difficulty of creating tests. Parasoft SOAtest uses existing UI tests (including Selenic-created tests) to record the API interactions that happen while the application runs. AI within SOAtest organizes these recorded interactions into recognizable scenarios, which then form the basis of an API test repository. These API scenarios can be played back, edited, cloned, and reused to form a comprehensive API test suite. The automation and AI-powered decision making in SOAtest make API testing easier to adopt, use, and maintain. They also help bridge the API testing knowledge gap in the development team.

Reuse existing test artifacts to efficiently scale load, performance, and security testing as part of the DevOps pipeline. As the development team becomes more proficient at test automation for the UI and API levels, the test repository becomes an important reusable resource. Tests can be reused for load and performance testing and to increase use case and code coverage.
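To make the idea of turning recorded traffic into scenarios concrete, here is a deliberately simple sketch. It is not SOAtest's algorithm: it assumes each recorded call carries a hypothetical `session` correlation ID and a timestamp, and it groups calls by session in time order. All names are illustrative.

```python
from collections import defaultdict
from typing import NamedTuple


class RecordedCall(NamedTuple):
    session: str      # hypothetical correlation ID captured with the traffic
    timestamp: float  # seconds since the recording started
    method: str
    path: str


def group_into_scenarios(calls):
    """Return {session: [(method, path), ...]} with each list in time order."""
    scenarios = defaultdict(list)
    for call in sorted(calls, key=lambda c: c.timestamp):
        scenarios[call.session].append((call.method, call.path))
    return dict(scenarios)


RECORDING = [
    RecordedCall("s1", 0.2, "GET", "/cart"),
    RecordedCall("s2", 0.3, "GET", "/profile"),
    RecordedCall("s1", 0.1, "POST", "/login"),
    RecordedCall("s1", 0.4, "POST", "/checkout"),
]
```

Calling `group_into_scenarios(RECORDING)` yields one ordered call sequence per session, for example login, cart, checkout for `s1`. Each sequence is a candidate API scenario that can be replayed, edited, or cloned.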
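Reusing a functional test for load testing can be as simple as running the same test function concurrently and timing each call. The sketch below uses only the Python standard library. `check_order_total` is a hypothetical functional test, and the user and iteration counts are arbitrary; a real load test would call the service under test and use a dedicated tool.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def check_order_total():
    """Hypothetical functional test: raises AssertionError on failure."""
    items = [("widget", 2, 4.50), ("gadget", 1, 10.00)]
    total = sum(qty * price for _, qty, price in items)
    assert total == 19.00


def run_as_load_test(test_fn, users=8, iterations=5):
    """Run test_fn concurrently; return (failure_count, latencies in seconds)."""

    def one_call(_):
        start = time.perf_counter()
        try:
            test_fn()
            ok = True
        except AssertionError:
            ok = False
        return ok, time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=users) as pool:
        results = list(pool.map(one_call, range(users * iterations)))
    failures = sum(1 for ok, _ in results if not ok)
    latencies = [elapsed for _, elapsed in results]
    return failures, latencies
```

The functional assertion is unchanged. Only the harness around it differs, which is what lets developers and testers share the same test artifacts.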

Obstacle 2: Execution of Tests Is Unstable, Unreliable, & Takes Too Long to Run

Understandably, software organizations expect automated tests to be efficient and not impede development progress. However, as test suites grow, so do the problems with maintaining and executing them. Tests, like code, are impacted by change. New functionality added during a sprint can significantly impact the user interface or the workflows of the application. These changes break existing tests, making them unstable. It’s important to address those as quickly as possible.

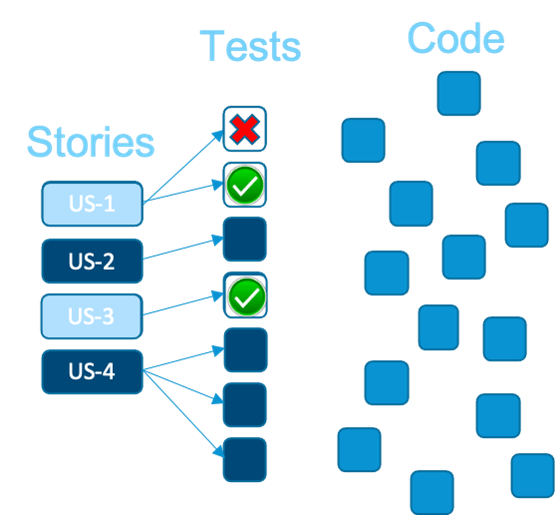

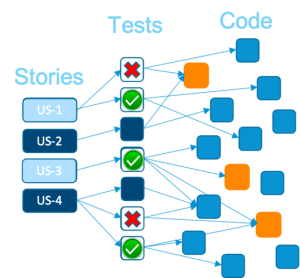

When tests fail, you need to understand the context of the failure. Not every test failure is equal. Some use cases are more important than others or, perhaps, some tests are unstable by nature. What is lacking is an understanding of the impact of test failures or test instabilities on the business priorities of the application. Investigating these constant test failures becomes a distraction from the overall test automation strategy. Correlation between business requirements and tests is critical to ensure that the value of automation is realized.

Another obstacle is the actual execution time for test suites. As the test portfolio grows, execution time grows with it, eventually exceeding a reasonable wait for feedback. Quick feedback on changes is essential for a successful CI/CD pipeline, so test efficiency and focus are required.

How to Remove: AI-powered Test Execution

The solution to the test execution obstacle is to test smarter with AI. This means leveraging test automation AI to make tests more resilient to change and to target execution on key tests only.

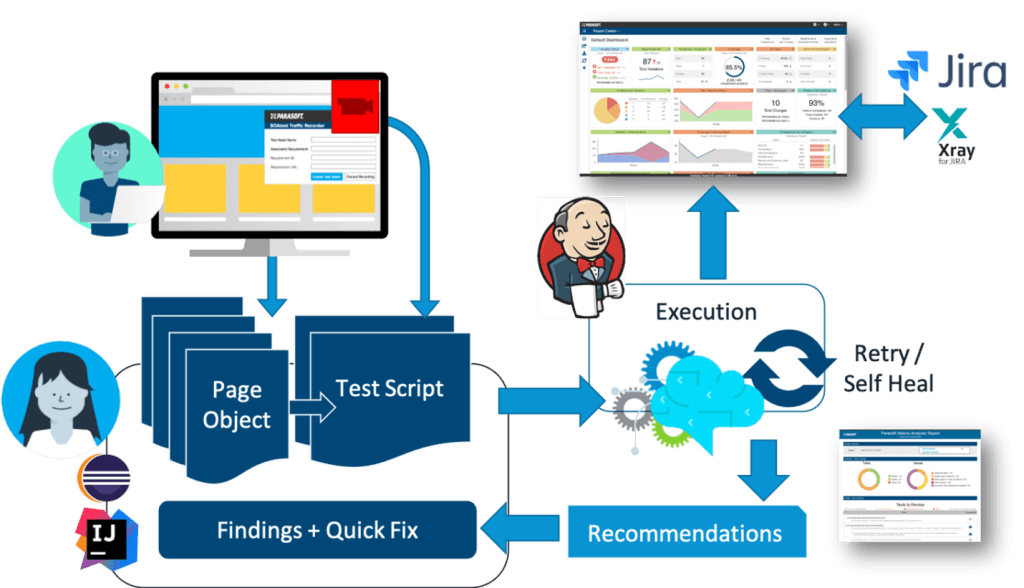

Smart UI testing with Selenium and Selenic. Using AI, Parasoft Selenic self-heals tests when UI changes are detected. The healed tests are used automatically, and recommendations are sent to the developer to help fix the tests. These fixes can be applied automatically to the Selenium tests, removing manual debugging and code changes.

Plan work item tests based on impacted requirements. To prioritize test activities, tests must be correlated with business requirements. Keeping track of user stories and requirements provides real-time visibility into the quality of the value stream. User stories and requirements should be reviewed for priority. The traceability capabilities in Parasoft SOAtest are used to plan execution for tests that validate items being worked on in-sprint. However, traceability alone is not enough, since it doesn’t show how recent changes have impacted the code.
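Planning tests from requirements comes down to a lookup over traceability data. The following sketch assumes a hypothetical mapping from test names to requirement IDs and a priority rank per requirement. It is an illustration of the idea, not the SOAtest traceability feature.

```python
def plan_tests(trace, in_sprint, priority):
    """Return tests covering in-sprint requirements, most important first.

    trace:     {test_name: set of requirement IDs the test validates}
    in_sprint: set of requirement IDs being worked on
    priority:  {requirement ID: rank}, where 1 is most important
    """
    selected = []
    for test, reqs in trace.items():
        hit = reqs & in_sprint
        if hit:
            # A test inherits the rank of its most important in-sprint requirement.
            selected.append((min(priority[r] for r in hit), test))
    return [test for _, test in sorted(selected)]


# Hypothetical traceability data.
TRACE = {
    "test_login": {"REQ-1"},
    "test_checkout": {"REQ-2", "REQ-3"},
    "test_profile": {"REQ-4"},
}
PRIORITY = {"REQ-1": 2, "REQ-2": 1, "REQ-3": 1, "REQ-4": 3}
```

With `REQ-1` and `REQ-3` in the sprint, `plan_tests(TRACE, {"REQ-1", "REQ-3"}, PRIORITY)` schedules `test_checkout` before `test_login` and skips `test_profile`.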

Use test impact analysis to validate only what has changed. To fully optimize test execution, it’s necessary to understand the code that each test covers, then determine the code that has changed. Parasoft tools provide this capability through a central repository for test results and analysis. Test impact analysis allows testers to focus only on the tests that validate the changes.

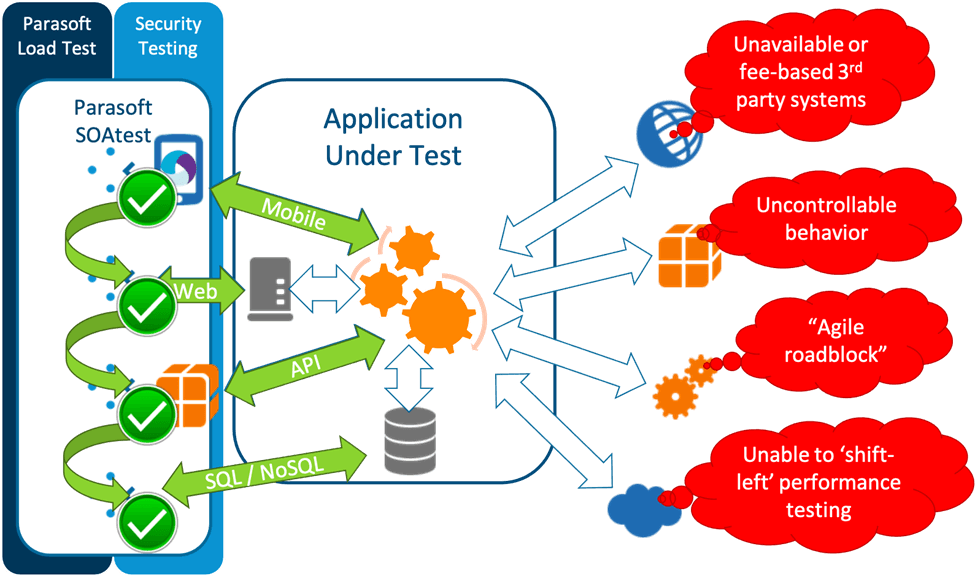
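At its core, test impact analysis is a set intersection between per-test coverage and the files in a change. The sketch below assumes a hypothetical coverage map gathered from an earlier full run; file and test names are made up, and real tools track coverage at a finer granularity than whole files.

```python
def impacted_tests(coverage, changed_files):
    """Return sorted names of tests whose covered files intersect the change set."""
    changed = set(changed_files)
    return sorted(test for test, files in coverage.items() if changed & set(files))


# Hypothetical per-test coverage collected from a previous full run.
COVERAGE = {
    "test_login": ["auth.py", "session.py"],
    "test_checkout": ["cart.py", "payment.py", "session.py"],
    "test_search": ["search.py"],
}
```

A commit that touches only `payment.py` selects `test_checkout` alone, while a change to `session.py` selects both `test_checkout` and `test_login`. Everything else can be skipped for that build.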

Obstacle 3: Test Environment Is Unavailable, Uncontrollable, & Constrained by System Dependencies

The testing environment is the linchpin of the obstacles stopping organizations from turning automated testing into continuous testing. Organizations face three types of challenges when trying to run tests anytime, anywhere while dealing with the application’s external dependencies. This is especially true for a microservices architecture, where the number of dependencies explodes due to the very nature of the design.

Test Environment Challenges

Waiting for access to a shared system, like a mainframe or an external dependency provided by a third party. Availability might be time limited and costly. It’s also a challenge if the external dependency is heavily loaded with multiple people working on it at the same time, resulting in test instability from data collisions.

Bottlenecks caused by delayed access. This stems from parallel development, which is typical of modern processes. For example, multiple teams collaborate to deliver new features to the value stream, such as interdependent microservices. Testing can’t proceed on one microservice because another isn’t available yet.

Uncontrollable test data. Although microservices are relatively easy to deploy and test in isolation, their dependencies on data or performance characteristics limit how thoroughly they can be tested. For example, reliance on data in a shared production database can limit the ability to test services.

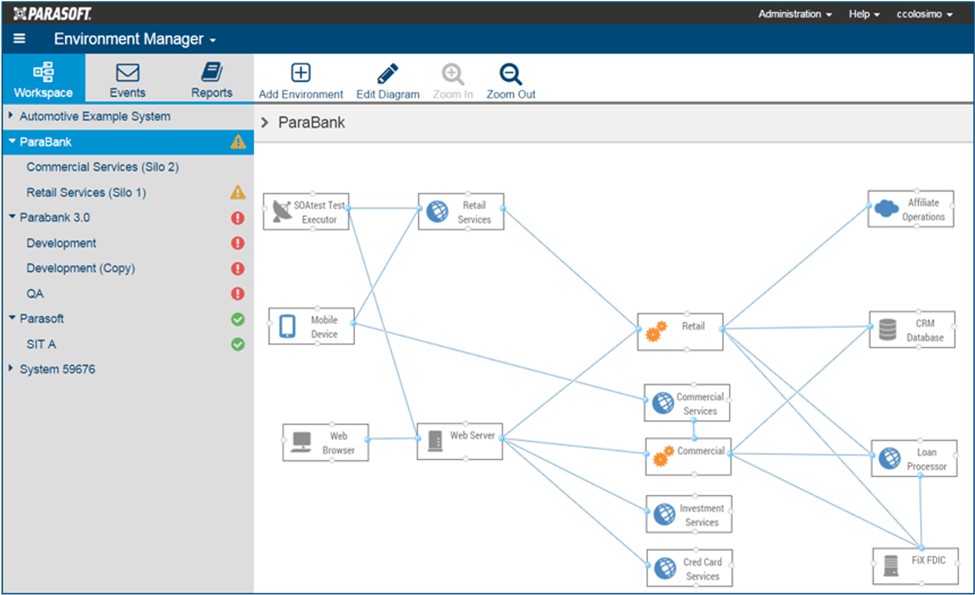

How to Remove: Control the Test Environment

Use a service virtualization solution to simulate these dependencies and give the team full control. Parasoft Virtualize simulates services that are out of your control or unavailable. It provides workflows that enable users to:

- Access complete and realistic test environments.

- Stabilize their test environment.

- Get access to otherwise inaccessible dependencies.

- Manage the complex business logic, test data, and performance characteristics required for virtual services to behave just like real services in the real environment they represent.

Service virtualization removes the bottlenecks. Here’s how.

Record and simulate: Capture, model, and provision simulations of live systems.

Using the recording capability of Parasoft SOAtest, it’s possible to capture the behavior of the application in its environment. Parasoft Virtualize models the behavior of external dependencies, making it possible to replace real dependencies with simulated ones on the fly and to switch between real and virtual as needed. Making these services and dependencies available and stable, virtually, accelerates the testing process and enables continuous testing.
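The principle behind a virtual service can be shown with the Python standard library alone: a small HTTP stub returns canned responses, and the code under test switches between real and virtual simply by changing its base URL. This is a minimal sketch, not Parasoft Virtualize. The inventory endpoint, payload, and function names are hypothetical.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Canned responses for a hypothetical inventory service.
CANNED = {"/inventory/42": {"sku": 42, "in_stock": 7}}

# Opener that ignores any system proxy so local calls go straight to the stub.
DIRECT = urllib.request.build_opener(urllib.request.ProxyHandler({}))


class VirtualService(BaseHTTPRequestHandler):
    def do_GET(self):
        payload = CANNED.get(self.path)
        found = payload is not None
        body = json.dumps(payload if found else {"error": "not found"}).encode()
        self.send_response(200 if found else 404)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep test output quiet


def start_virtual_service():
    """Start the stub on a free local port and return (server, base_url)."""
    server = HTTPServer(("127.0.0.1", 0), VirtualService)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://127.0.0.1:{server.server_port}"


def fetch_stock(base_url, sku):
    """Code under test: behaves the same against a real or a virtual base URL."""
    with DIRECT.open(f"{base_url}/inventory/{sku}") as response:
        return json.load(response)["in_stock"]
```

A test starts the stub with `start_virtual_service()`, passes the returned URL to `fetch_stock`, and calls `server.shutdown()` when done. Pointing the same function at the real service's URL requires no code change, which is what makes switching between real and virtual dependencies cheap.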

Deliver a prototype first: Model behavior based on contract descriptions or payload examples.

Service virtualization enables prototype development based on the contract descriptions derived from the API interaction recordings and analysis in SOAtest.

Dependent services can be simulated with good fidelity to create prototype versions that satisfy their roles in the system when testing another adjacent service. This removes the schedule limitation inherent in parallel development—even when services aren’t complete, they can be virtualized for testing other services.

Synthesize private test data.

Another obstacle in testing enterprise applications is test data. Many organizations use real data, but this is fraught with privacy concerns. Purely synthetic data is often not realistic enough to test with, so a compromise is needed. Synthesizing test data from real data by removing personally identifiable information (PII) provides realistic and safe-to-use data. Test data management is required in conjunction with service virtualization to provide a realistic, highly available test environment that won’t result in any privacy compromises.
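One common way to remove PII while keeping data realistic is to replace sensitive fields with stable tokens so that relationships between records survive. The sketch below is an assumption-laden illustration: the field names, the salt, and the 12-character token length are arbitrary choices, and a salted hash only protects the original values as long as the salt stays secret.

```python
import hashlib

PII_FIELDS = ("name", "email")  # assumed set of sensitive fields


def pseudonymize(value, salt):
    """Map a value to a stable token; the same input always gives the same token."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return digest[:12]


def mask_record(record, salt, pii_fields=PII_FIELDS):
    """Return a copy of record with PII fields tokenized and other fields untouched."""
    return {
        key: pseudonymize(str(value), salt) if key in pii_fields else value
        for key, value in record.items()
    }


# Hypothetical production-like record.
ORDER = {"name": "Ada Example", "email": "ada@example.com", "order_total": 19.0}
```

`mask_record(ORDER, salt="per-environment-secret")` keeps `order_total` intact for realistic test scenarios, while the same customer always maps to the same token, so joins across masked tables still line up.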

The Benefits of Continuous Testing

Removing the key obstacles to continuous testing enables testing to occur on a regular, predictable schedule. It transforms application testing, empowering teams to:

- Test earlier. Shift left to in-sprint testing where it’s quicker, cheaper, and easier to remediate the problem.

- Test faster. Automate and execute continuously to get immediate feedback when defects are introduced.

- Test less. Focus and spend less time creating, maintaining, and executing test scenarios. Reduce the cost of test infrastructure.

Want to learn some more about continuous testing? Join us at EuroSTAR in September, for 3 days of talks, tutorials, and lots more from leading test experts. Check out the full programme.

Parasoft is exhibiting at EuroSTAR Conference this year – take a look at their work here.

Author

Mark Lambert, VP of Strategic Initiatives at Parasoft

Lambert focuses on identifying and developing testing solutions and strategic partnerships for targeted industry verticals to enable clients to accelerate the successful delivery of high-quality, secure, and compliant software. Since joining Parasoft in 2004, Lambert has held several positions, including VP of Professional Services and VP of Products. Lambert is a public speaker and author. He’s been invited to speak at industry events such as JavaOne, Embedded World, AgileDevDays, and StarEast/StarWest. He has published thought-leadership articles in SDTimes, DZone, QAFinancial, and Software Test & Performance. Lambert earned both his Bachelor’s and Master’s degrees in Computer Science at Manchester University, UK.