One of my childhood superheroes was the Terminator: the machine sent from the future to save the future. I remember the tears in my eyes when the T-800 lowered himself into the molten steel. Do you remember what he said before he did it? What were his last words to John Connor?

The Terminator: I know now why you cry, but it is something I can never do.

Was the Terminator true AI? Yes, he was. Did he have limitations? Yes, he did. Could he do everything that a human could do? No, he couldn’t.

As you know, AI doesn’t exist. Period. If any test automation tool out there claims to have AI, it is simply lying. Such tools might have smart algorithms and advanced object identification methods, but they are only as good as the developers who wrote them. That is not Artificial Intelligence. That is Human Intelligence wrapped in nice algorithms. And, like the T-800, it has its flaws and cannot fully mimic a human user. If true AI did exist, would there be any need for a test automation platform at all? Wouldn’t it simply upgrade the test automation tool itself to work faster, better and more reliably? That would be true AI.

Smart algorithms let you work faster, but…

The heavily advertised AI features of your new testing tool are nothing more than algorithms that automate the manual steps you would otherwise need to take. Imagine that each time you want to find an object, you need to go through the whole HTML tree and work out the best way to identify it. Smart algorithms will do this for you, and faster, but they only follow a pattern: in other words, the identification methods their inventor thought of. This means there may be edge cases where you still need to do some manual work because the “AI” didn’t anticipate your pattern or scenario. Either way, they will increase your productivity; just keep in mind that they will not do everything for you.
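
To make the “only follows its inventor’s patterns” point concrete, here is a minimal sketch of a locator that tries a fixed, hard-coded list of identification strategies against an HTML page, using only Python’s standard `html.parser`. The strategy list, `find_element` and its return shape are hypothetical and not taken from any real tool; the point is that an element reachable only through an attribute missing from the list (here `src`) is simply not found.

```python
from html.parser import HTMLParser

# Hypothetical, hard-coded identification strategies, tried in this order.
# The locator can never use a strategy that is not listed here.
STRATEGIES = ("id", "name", "aria-label", "text")

# Elements that never have a closing tag, so they are not pushed on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class _ElementCollector(HTMLParser):
    """Collects every start tag with its attributes and its direct text."""

    def __init__(self):
        super().__init__()
        self.elements = []  # dicts: {"tag": ..., "attrs": {...}, "text": ...}
        self._stack = []    # currently open (non-void) elements

    def handle_starttag(self, tag, attrs):
        element = {"tag": tag, "attrs": dict(attrs), "text": ""}
        self.elements.append(element)
        if tag not in VOID_TAGS:
            self._stack.append(element)

    def handle_endtag(self, tag):
        if self._stack and self._stack[-1]["tag"] == tag:
            self._stack.pop()

    def handle_data(self, data):
        if self._stack:
            self._stack[-1]["text"] += data.strip()


def find_element(html, target):
    """Return (strategy, element) for the first strategy that matches exactly
    one element, or None if none of the predefined strategies works."""
    collector = _ElementCollector()
    collector.feed(html)
    for strategy in STRATEGIES:
        if strategy == "text":
            matches = [e for e in collector.elements if e["text"] == target]
        else:
            matches = [e for e in collector.elements
                       if e["attrs"].get(strategy) == target]
        if len(matches) == 1:  # unique match: this strategy identifies it
            return strategy, matches[0]
    return None  # an edge case the inventor did not anticipate: manual work
```

For a login form such as `<form><input id="user"><input name="password" type="password"><button>Login</button><img src="logo.png"></form>`, the button is found by its text, but the logo returns `None` because no strategy looks at `src`.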

Use “Smart” where it matters

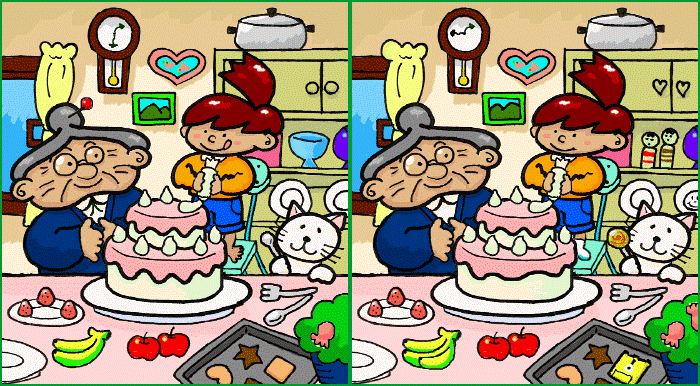

Consider the following scenario: you are manually executing a test case that checks the login functionality of your standalone software. You see the UserName and Password fields along with a Login button. So far so good. Now imagine that instead of your software’s logo you see a black image. You, as a human, recognize that as a bug. An automated test case cannot, because it only checks the login functionality. As a manual tester you will report this bug; the automated test case will happily report that everything passed. Unless you add a test case that specifically checks the logo every time, you will never find this bug automatically. Bad, isn’t it? However, there is a way to solve this problem without implementing dedicated automated test cases: visual comparison. This is something an algorithm can do better than a human. Look at the picture below.

If you have kids, you probably know the game “spot the difference”. How many differences can you find? How long would it take you? I found 15, and it took me quite some time. I am sure there are quite a few more.

How does spotting the differences apply to automated test cases? It’s quite simple. Imagine you execute your test case for the very first time and collect a screenshot after each test step. Those screenshots become your reference point.
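
A minimal sketch of how those reference screenshots could be recorded on the first run and loaded on later runs, assuming screenshots arrive as PNG bytes and are stored on disk, one file per test step. The directory layout and the names `baseline_path` and `record_or_load_baseline` are hypothetical, not part of any specific tool.

```python
import tempfile
from pathlib import Path


def baseline_path(baseline_dir, test_case, step):
    # Hypothetical layout: one PNG per test step, e.g. <dir>/login/step_03.png
    return Path(baseline_dir) / test_case / f"step_{step:02d}.png"


def record_or_load_baseline(baseline_dir, test_case, step, screenshot: bytes):
    """First run: save `screenshot` as the reference and return None.
    Later runs: return the stored reference bytes so they can be compared."""
    path = baseline_path(baseline_dir, test_case, step)
    if not path.exists():
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_bytes(screenshot)
        return None
    return path.read_bytes()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(record_or_load_baseline(tmp, "login", 1, b"first run"))   # None
        print(record_or_load_baseline(tmp, "login", 1, b"second run"))  # b'first run'
```

Keying the file by test case and step index keeps each screenshot tied to the exact point in the test where it was taken, so later runs compare like with like.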

Each time you execute your test cases, your test automation solution should compare the current screens with the reference screenshots and automatically detect any differences.
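
The simplest form of such a comparison is a pixel-by-pixel diff. The sketch below assumes both screenshots have already been decoded into rows of RGB tuples (a real tool would use an imaging library for that step) and uses a hypothetical per-channel `tolerance` to ignore small rendering noise such as anti-aliasing. It is one illustrative technique, not the algorithm used by any particular product.

```python
Pixel = tuple[int, int, int]  # (R, G, B), each 0-255
Image = list[list[Pixel]]     # rows of pixels, top to bottom


def diff_pixels(reference: Image, current: Image, tolerance: int = 0) -> list[tuple[int, int]]:
    """Return the (x, y) coordinates whose colour differs by more than
    `tolerance` in any channel between the reference and current screenshot."""
    if len(reference) != len(current) or any(
        len(ref_row) != len(cur_row) for ref_row, cur_row in zip(reference, current)
    ):
        raise ValueError("screenshots must have the same dimensions")
    diffs = []
    for y, (ref_row, cur_row) in enumerate(zip(reference, current)):
        for x, (ref_px, cur_px) in enumerate(zip(ref_row, cur_row)):
            if max(abs(a - b) for a, b in zip(ref_px, cur_px)) > tolerance:
                diffs.append((x, y))
    return diffs


if __name__ == "__main__":
    WHITE, BLACK = (255, 255, 255), (0, 0, 0)
    reference = [[WHITE] * 4 for _ in range(3)]
    current = [row[:] for row in reference]
    current[0][0] = current[0][1] = BLACK  # the "logo" area turned black
    print(diff_pixels(reference, current))  # [(0, 0), (1, 0)]
```

In the login scenario, the black logo shows up as a cluster of differing pixels even though the functional login check passes.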

What is the result of the test case?

The valid question to ask is: what should happen to the result of the automated test case? There is no simple answer. Depending on your approach, you can either fail the test case (when you don’t expect a single deviation) or just attach a warning to the result. In the end, what matters is that visual differences don’t go unnoticed and that you can always identify when something has unexpectedly changed in your software.

At TestResults.io we use the Human Factor to let our customers easily spot changes in their UI. If you want to learn more, email us or contact us directly.

Bonus

To show how UI differences could be presented to the user, here is what the output of a Human Factor algorithm could look like in reality.
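
How the Human Factor algorithm builds its output is not described here. A common way to present differences, assumed in this sketch, is to group neighbouring differing pixels into regions and outline each region with a box. `diff_regions` is a hypothetical helper that takes a list of differing `(x, y)` coordinates and returns one inclusive bounding box per 8-connected region.

```python
from collections import deque


def diff_regions(diffs):
    """Group differing pixels into 8-connected regions and return one
    inclusive bounding box (left, top, right, bottom) per region."""
    remaining = set(diffs)
    boxes = []
    while remaining:
        start = remaining.pop()
        queue = deque([start])
        left = right = start[0]
        top = bottom = start[1]
        while queue:  # breadth-first flood fill over neighbouring diffs
            x, y = queue.popleft()
            left, right = min(left, x), max(right, x)
            top, bottom = min(top, y), max(bottom, y)
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    neighbour = (x + dx, y + dy)
                    if neighbour in remaining:
                        remaining.remove(neighbour)
                        queue.append(neighbour)
        boxes.append((left, top, right, bottom))
    return sorted(boxes)
```

Each returned box can then be drawn on top of the current screenshot, so the reviewer sees a handful of highlighted areas instead of thousands of individual pixels.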

Did you spot all the differences? 🙂

———————————

Wojciech Olchawa is a Product Manager and ISTQB-certified Test Automation Engineer at progile, home of TestResults.io.

He has worked as a Software Tester and Test Manager on various projects in the medical device industry and the airline sector. He applies his experience in software testing to shape TestResults.io and enable customers to benefit from high-class, reliable automated testing.

Follow him on LinkedIn, where he likes to share his knowledge in videos and blog posts.