With software becoming more complex and release cycles getting shorter, traditional testing methods are struggling to keep up. That’s where Generative AI (Gen AI) comes in. Instead of spending hours writing test cases or fixing broken scripts, teams can now use AI-powered tools to create tests, adapt to changes, and catch issues earlier—all with less manual effort.

Gartner predicts more than 80% of enterprises will have used generative AI APIs or deployed generative AI-enabled applications by 2026.

But this isn’t about replacing testers. It’s about making their work easier and freeing them to focus on what matters: building better software, faster. Let’s look at how AI-driven testing is changing the game and what it means for QA teams today.

The Problems with Traditional QA

QA testing has long been a challenge for software development teams. While manual testing provides detailed human insight, it is slow, labor-intensive, and prone to human error. Although automated testing helps speed things up, it comes with its own set of issues, particularly in terms of script maintenance and adaptability.

- Time-consuming test creation and execution – Writing test cases from scratch is slow and requires significant effort. Running tests across different environments and devices adds further delays.

- Frequent script failures – Automated test scripts often break when applications undergo minor UI or functionality changes, leading to high maintenance efforts.

- Lack of scalability – As applications grow in complexity, maintaining comprehensive test coverage becomes difficult. Manual testing struggles to scale, and automated testing requires extensive upkeep.

- Reactive bug detection – Traditional testing often identifies defects late in the development cycle, leading to costly fixes and delays.

- High operational costs – The need for large QA teams, expensive testing tools, and ongoing maintenance increases the overall cost of software development.

- Limited test coverage – Manual and traditional automation approaches often miss edge cases or complex user interactions, increasing the risk of undetected bugs in production.

How Gen AI Transforms QA Testing

The limitations of traditional testing often result in slower development cycles, higher costs, and an increased likelihood of defects reaching end users. To stay competitive, organizations need smarter, more adaptive testing solutions—this is where Gen AI makes a difference.

- Automated Test Generation

Gen AI can analyze user stories, requirements, and past test data to automatically create test cases. This reduces the time testers spend writing scripts and helps broaden test coverage. AI-generated tests can even include edge cases that might be overlooked manually.

- Self-Healing Test Scripts

One of the biggest pain points in automated testing is script maintenance. When an application’s UI changes, traditional automation scripts often break. AI-powered tools detect these changes and automatically update the affected test scripts, minimizing manual intervention.

- Smarter Defect Detection

AI doesn’t just run tests; it learns from past failures. By analyzing historical test data, Gen AI can predict where bugs are likely to occur, helping teams focus their efforts on high-risk areas and catch issues before they reach production.

- Natural Language Test Execution

With AI-based test agents that use natural language processing (NLP), testers can write test cases in plain English instead of coding them. The AI converts these descriptions into automated test scripts, making test automation more accessible to non-technical team members.

- Faster Regression Testing

Automating regression testing is crucial for agile teams. Gen AI enables continuous testing by quickly running thousands of test cases, providing real-time insights and shortening release cycles.
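To make the self-healing idea more concrete, here is a minimal Python sketch of one common approach, assuming the test recorded several attributes of each element the last time it passed: if the primary locator (the element’s id) no longer matches, score the elements on the page against those recorded attributes and re-bind to the closest match above a threshold. It is an illustration only, not how Kane AI or any specific tool works. The page is modeled as a list of plain dictionaries instead of a live browser DOM, and the function names, attribute weights, and 0.5 threshold are hypothetical.

```python
from __future__ import annotations

from typing import Optional

# Hypothetical weights: how much each recorded attribute counts toward a match.
WEIGHTS = {"id": 0.4, "name": 0.2, "text": 0.25, "tag": 0.05, "css_class": 0.1}


def similarity(recorded: dict, candidate: dict) -> float:
    """Sum the weights of the recorded attributes that the candidate still shares."""
    return sum(
        weight
        for attr, weight in WEIGHTS.items()
        if recorded.get(attr) and candidate.get(attr) == recorded.get(attr)
    )


def heal_locator(recorded: dict, page: list[dict], threshold: float = 0.5) -> Optional[dict]:
    """Find the recorded element, re-binding to a close match if its id changed."""
    # 1. Primary locator: an exact id match means nothing needs healing.
    primary_id = recorded.get("id")
    if primary_id is not None:
        for element in page:
            if element.get("id") == primary_id:
                return element
    # 2. The primary locator broke: score every candidate on the page.
    best = max(page, key=lambda el: similarity(recorded, el), default=None)
    if best is not None and similarity(recorded, best) >= threshold:
        return best
    # 3. Nothing is similar enough: report a real failure rather than guess.
    return None


if __name__ == "__main__":
    recorded = {"id": "submit-btn", "name": "submit", "text": "Place order",
                "tag": "button", "css_class": "btn-primary"}
    # After a redesign the button's id changed, but its other attributes survived.
    page = [
        {"id": "nav-home", "tag": "a", "text": "Home"},
        {"id": "checkout-submit", "name": "submit", "text": "Place order",
         "tag": "button", "css_class": "btn-primary"},
    ]
    healed = heal_locator(recorded, page)
    print(healed["id"])  # checkout-submit
```

Returning None when no candidate clears the threshold is a deliberate choice: a healing step that always picks something can silently turn a real regression into a passing test. A full tool would typically also write the healed locator back into the test so the fix persists.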

The Future of AI in Software Testing

AI-driven testing is evolving rapidly, and its adoption is expected to grow significantly in the coming years. According to market.us, the global market for AI in software testing is expected to grow from USD 1.9 billion in 2023 to around USD 10.6 billion by 2033, a compound annual growth rate (CAGR) of 18.70% over the forecast period from 2024 to 2033.
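As a quick sanity check on these market figures, the standard compound annual growth rate formula over the ten years from the 2023 base to 2033 gives:

```latex
\text{CAGR} = \left(\frac{V_{2033}}{V_{2023}}\right)^{1/10} - 1
            = \left(\frac{10.6}{1.9}\right)^{1/10} - 1
            \approx 5.579^{0.1} - 1
            \approx 0.188
```

That is roughly 18.8% per year. The small gap from the reported 18.70% most likely reflects rounding of the two endpoint values to one decimal place.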

Here’s what we can expect in the future:

- More advanced AI-powered test agents – AI test bots with enhanced NLP capabilities will allow even non-technical users to create and execute automated tests with minimal effort.

- AI-driven predictive testing – AI will analyze historical defects, system logs, and code changes to anticipate where bugs are likely to occur, allowing teams to focus their testing efforts more effectively.

- Increased adoption of self-healing tests – Self-healing scripts can automatically adapt to UI changes, reducing maintenance effort and manual intervention.

- Seamless AI integration with DevOps pipelines – AI-driven testing will become a standard component of CI/CD workflows, accelerating software releases while maintaining high quality.

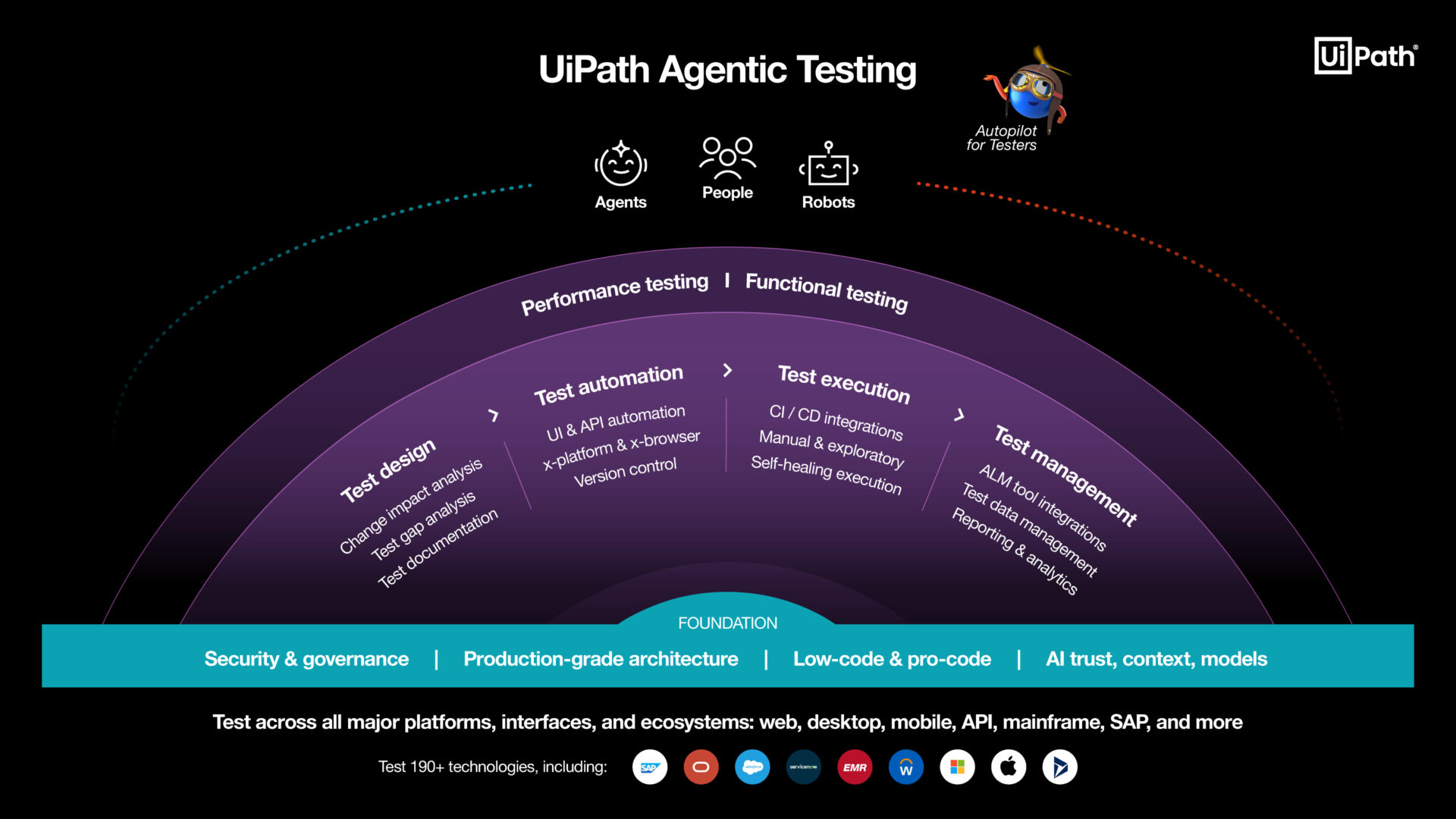

- Hyperautomation in QA – Combining AI with robotic process automation (RPA) and machine learning will create highly efficient, fully automated testing ecosystems.
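The predictive-testing idea in the list above can be illustrated with a deliberately simple heuristic rather than a trained model: rank modules by a weighted mix of their past defect counts and their code churn in the current release, then test the riskiest modules first. This is a sketch under stated assumptions, not how any particular product works. The module names, the numbers, and the 0.7/0.3 weighting are hypothetical, and an AI-driven tool would instead learn such relationships from historical data, logs, and code changes.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ModuleHistory:
    name: str
    past_defects: int   # defects traced to this module in earlier releases
    lines_changed: int  # code churn in the current release


def risk_scores(modules: list[ModuleHistory], defect_weight: float = 0.7,
                churn_weight: float = 0.3) -> dict[str, float]:
    """Score modules by normalized defect history and churn.

    With the default weights (which sum to 1), every score falls in [0, 1].
    """
    # Normalize against the largest value; fall back to 1 to avoid dividing by zero.
    max_defects = max((m.past_defects for m in modules), default=0) or 1
    max_churn = max((m.lines_changed for m in modules), default=0) or 1
    return {
        m.name: defect_weight * m.past_defects / max_defects
        + churn_weight * m.lines_changed / max_churn
        for m in modules
    }


def prioritize(modules: list[ModuleHistory]) -> list[str]:
    """Return module names ordered from highest to lowest estimated risk."""
    scores = risk_scores(modules)
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    history = [
        ModuleHistory("checkout", past_defects=12, lines_changed=400),
        ModuleHistory("search", past_defects=3, lines_changed=900),
        ModuleHistory("profile", past_defects=1, lines_changed=50),
    ]
    print(prioritize(history))  # ['checkout', 'search', 'profile']
```

Here "checkout" ranks first because its long defect history outweighs the heavier churn in "search"; shifting the weights toward churn would change that order, which is exactly the kind of trade-off a learned model would tune from real project data.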

As AI continues to improve, software testing will become more autonomous, intelligent, and efficient. Testers will shift their focus from repetitive execution to strategic decision-making, ensuring that AI complements human expertise rather than replacing it.

Gen AI Isn’t Here to Replace Testers—It’s Here to Empower Them

One of the biggest concerns surrounding AI-driven testing is the fear of job displacement. However, the reality is quite the opposite. AI is designed to amplify human capabilities, not replace them. Testers play a critical role in quality assurance, and AI is simply a tool to help them work smarter.

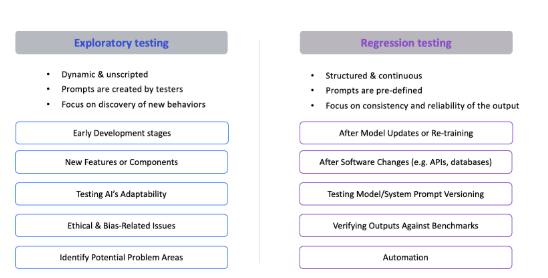

Instead of spending hours on repetitive test execution and debugging broken scripts, testers can now focus on exploratory testing, usability evaluation, and strategic test design. AI helps remove bottlenecks, speeds up the testing process, and allows teams to shift their efforts toward more valuable tasks.

Testers are no longer just bug finders; they are quality enablers. AI allows them to do more in less time, ensuring that software is not only functional but also user-friendly, accessible, and secure.

The Next Step in AI-Powered Testing

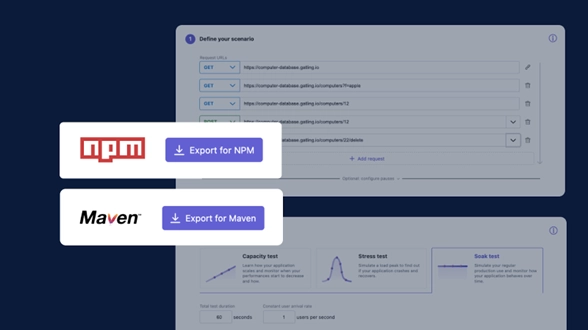

For teams looking to embrace AI-driven testing, tools like Kane AI offer a game-changing approach. As the world’s first AI-native QA Agent-as-a-Service platform, Kane AI simplifies test generation, automation, and debugging through natural language. By integrating seamlessly with existing workflows, it helps teams create resilient, scalable tests with minimal manual effort—empowering testers to focus on quality rather than maintenance.

The future of testing belongs to teams that adopt AI as a collaborative partner, leveraging its strengths while focusing on delivering high-quality, user-centric software. As Karim Lakhani put it: “AI won’t replace humans, but humans with AI will replace humans without AI.” The key is to adapt, innovate, and lead with AI, because the future of testing isn’t just automated; it’s intelligent.

Author

Mudit Singh

VP of Growth & Product, LambdaTest

LambdaTest are exhibitors in this year’s EuroSTAR Conference EXPO. Join us in Edinburgh, 3-6 June 2025.