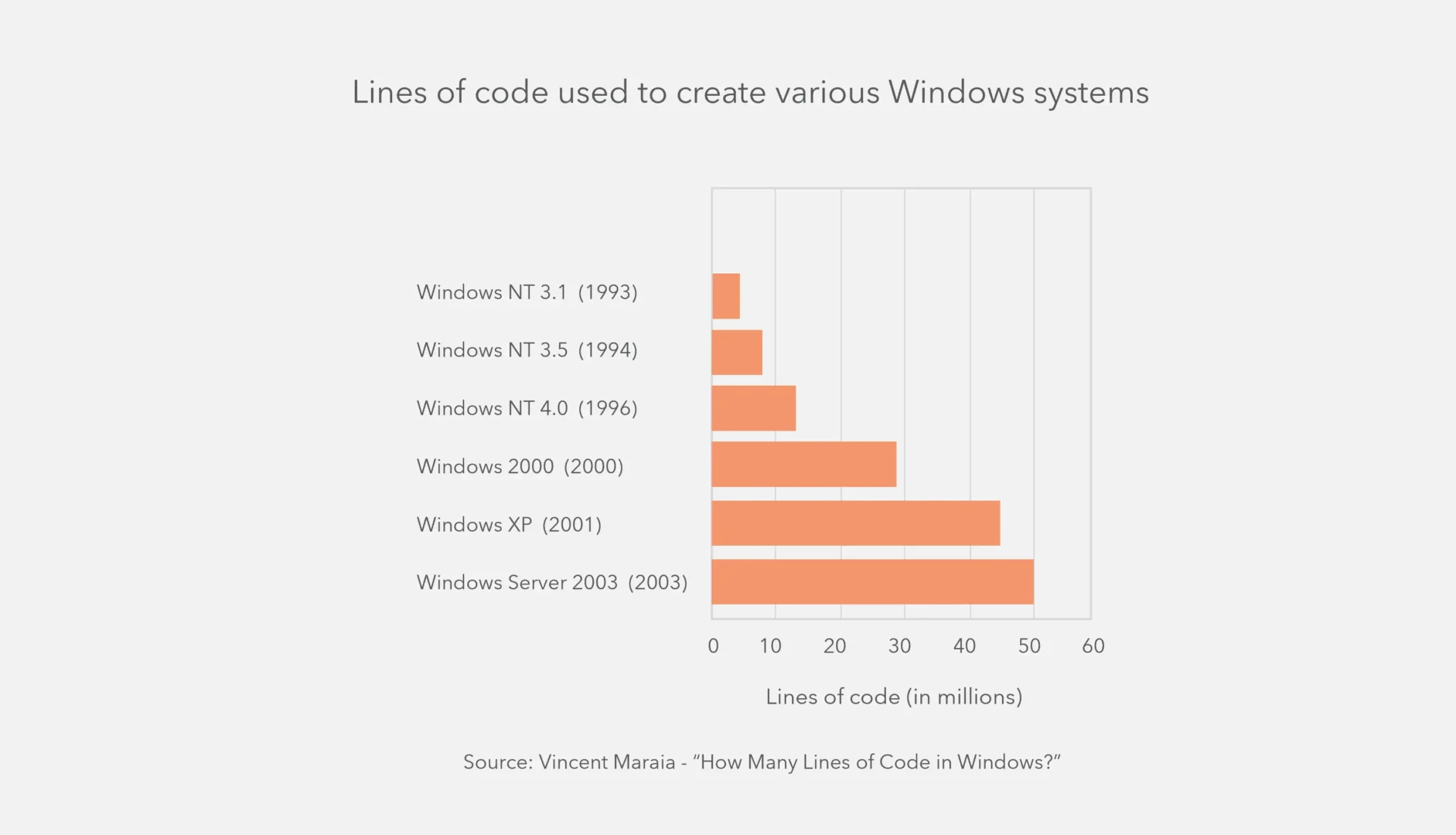

As modern solutions grow more complex, not only does the challenge for software developers increase, but so do the expectations placed on software testers. Although metrics such as ‘lines of code’ are not universally meaningful, the following graph clearly shows where the journey is heading.

In the early days of computing, programs were relatively simple and straightforward. However, as technology advanced and software applications became more powerful, code bases grew significantly. There is nothing wrong with that, of course, but it does pose new challenges.

Increased complexity – more bugs

As software has evolved, so has its complexity. Software systems now encompass complex architectures, sophisticated algorithms, and diverse integrations with various platforms and technologies. The demand for advanced functionalities, seamless user experiences, and robust security measures has led to the development of complex software applications. Managing this complexity requires careful design, modularization, and the adoption of architectural patterns to ensure maintainability and scalability.

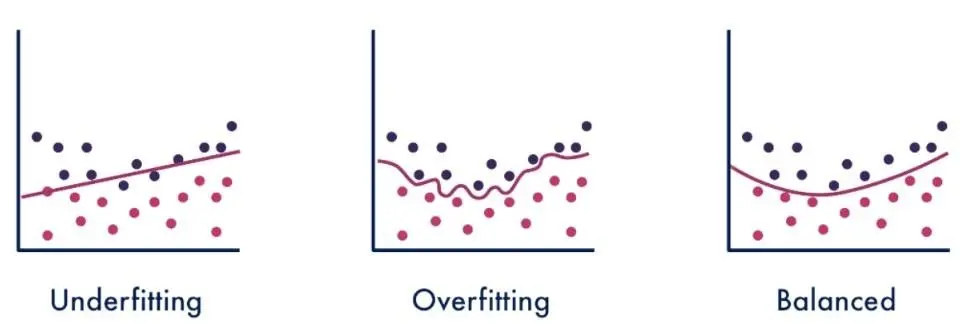

While software has become more sophisticated and powerful, the presence of bugs remains a challenge. As software grows in size and complexity, the potential for defects and errors also increases. The sheer volume of code, coupled with interdependencies between modules and integration with external components, introduces a higher likelihood of bugs.

The need for faster product delivery can impact product quality

Companies are under increasing pressure to deliver software with new functionality and improvements to stay ahead of the competition. This pushes every role involved to deploy new code rapidly, which often results in reduced testing effort and, in turn, more bugs in production.

In today’s competitive business environment, a company’s reputation is also closely tied to the perceived and experienced reliability of its services. Even the smallest bug can have a significant negative impact on a company. Testing is therefore a crucial discipline: it identifies bugs before end users discover them and so protects the company’s reputation.

The crucial role of testers in product quality assurance

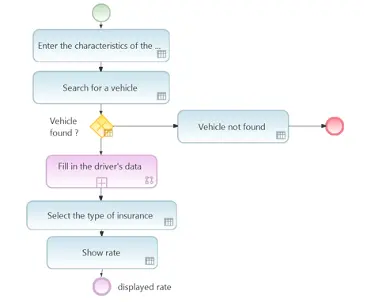

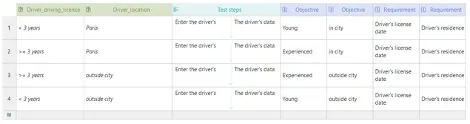

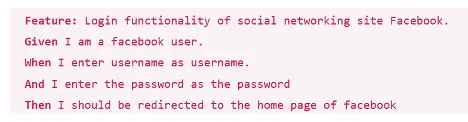

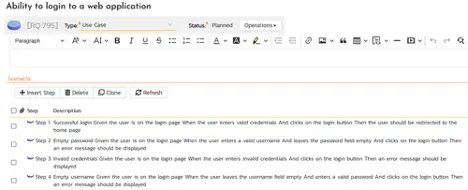

In this context, testers play a crucial role in safeguarding product quality by ensuring that the product meets the required standards for functionality, reliability, usability, and more. This cannot be achieved just by clicking around in an application and reporting some “strange behaviors”. Testing should be a systematic, traceable activity based on specifications, proven methods, and an overall understanding of the business context.

To ensure product functionality and quality, you need skilled people with a quality-focused mindset who understand business processes as well as the implemented technologies and solutions. This work cannot be a “last-minute” task that is skipped when deadlines are tight or handed to whoever happens to be available.

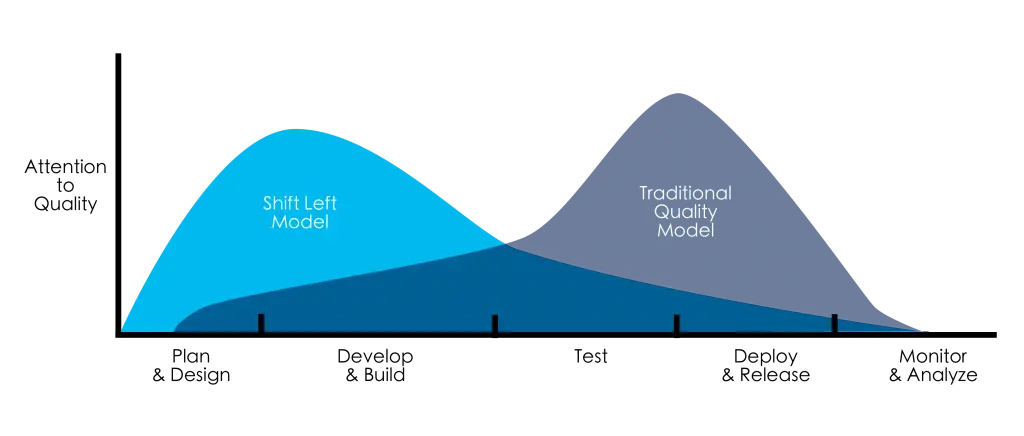

Shift testing to the left and involve testers from the beginning

So, how is it possible to implement quality assurance in such a way that it does not delay the delivery date and still has an actual effect?

This requires experienced testers who can analyze the use cases and the IT solution design. Even more crucially, they should be involved from the very beginning of the process. By challenging business ideas and identifying missing elements or flows early on, even before any code is written, testers help avoid wasting resources on functionality that would otherwise have to be changed later. This calls for close collaboration between business, testers, and developers as one team.

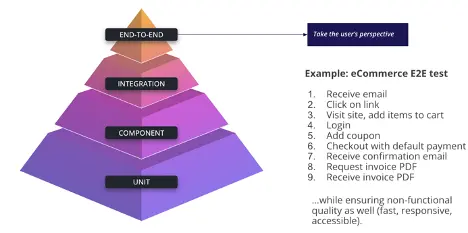

Starting testing early in the software development life cycle (SDLC) is known as the “Shift left” approach, and it comes from the idea of shifting test activities to the “left” in the development process timeline to ensure problems are detected as early as possible. Involving testers right away, starting with the requirements gathering stage, brings numerous benefits, such as early defect detection, faster feedback to developers, better collaboration between teams, and reduced business risk.

Efficient testing without excessive costs

In an environment of rapid code deployment, financial investment alone won’t solve quality issues. In addition to “Shift left”, there are other methods that can make testing effective without increasing the testing budget.

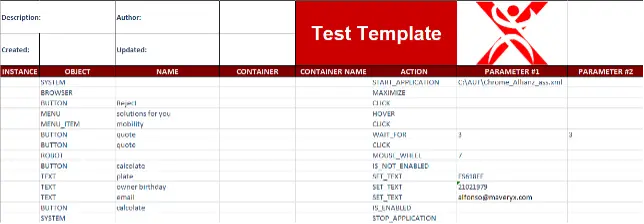

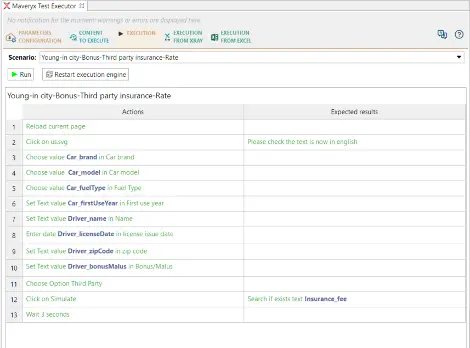

Testing isn’t just about speed; it’s a strategic blend of prioritization and disciplined collaboration. At Sixsentix, we advocate a disciplined testing approach centered on business-facing testing and business risk coverage.

With our risk-based methodology, we can clearly identify the processes and features with the greatest business impact, based on the frequency of use and potential damage that can occur as a result of failure. We perform a thorough analysis of the business, accurately determine specific business risks thanks to the combination of expertise and domain knowledge, and then prioritize testing of sensitive components.
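The prioritization idea above can be illustrated with a minimal sketch. It assumes, purely for illustration, that frequency of use and potential damage are each rated on a 1 to 5 scale and multiplied into a single risk score; the `Feature` class, the scales, and the example features are hypothetical and do not represent Sixsentix's actual methodology or tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    """A business process or feature under test (hypothetical example data)."""
    name: str
    frequency: int  # assumed scale: 1 (rarely used) to 5 (used constantly)
    damage: int     # assumed scale: 1 (minor impact) to 5 (severe impact) on failure

    @property
    def risk_score(self) -> int:
        # Simple risk estimate: likelihood of exposure multiplied by impact of failure.
        return self.frequency * self.damage


def prioritize(features: list[Feature]) -> list[Feature]:
    """Return features ordered from highest to lowest risk score.

    Among features with the same score, the one with more potential
    damage is placed first.
    """
    return sorted(features, key=lambda f: (f.risk_score, f.damage), reverse=True)


if __name__ == "__main__":
    backlog = [
        Feature("Change profile picture", frequency=2, damage=1),
        Feature("Log in", frequency=5, damage=4),
        Feature("Transfer funds", frequency=4, damage=5),
        Feature("Export yearly statement", frequency=1, damage=4),
        Feature("Search transactions", frequency=4, damage=2),
    ]
    # Print the test order, highest business risk first.
    for rank, feature in enumerate(prioritize(backlog), start=1):
        print(f"{rank}. {feature.name} (risk {feature.risk_score})")
```

With this sample data, "Transfer funds" and "Log in" both score 20, and the damage tie-breaker puts the funds transfer first; "Change profile picture" ends up last. Multiplying the two factors is only one possible choice: a feature that scores high on a single dimension can still rank low, so real projects may prefer weighted scales or a risk matrix.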

This way, we make sure that key functionalities are checked and validated quickly and that the most pressing business risks are ruled out. Sixsentix combines this approach with “Shift left”, which enables continuous testing and deployment after every change. As a result, the latest updates can be released as soon as possible without critical failures that could damage your reputation and business in the long term.

Enhancing software quality through team dynamics

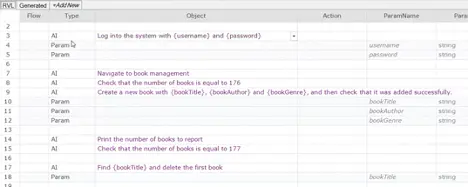

It’s also crucial to have a cohesive team composition, backed by a time-tested method. Our testing teams use our well-refined SWAT methodology, which enables them to take a systematic approach to testing.

At the same time, we abide by the “Tres Amigos” principle. It highlights the participation of three key roles (the product owner, the developer, and the tester) and emphasizes that these three roles are, in fact, one team pursuing the same goals and priorities, which significantly boosts the delivery of high-quality software.

Finally, to orchestrate and automate testing activities efficiently, we also designate a test architect for each project who defines the overall testing strategy and framework and ensures they align with business objectives. This coordinated effort significantly improves the testing process, raising software quality and helping to deliver a successful, reliable product.

Moving forward

Transforming quality assurance involves continuous improvement, robust collaboration, and the embrace of automation, propelling organizations towards a mature QA level where testing adapts to evolving requirements and aligns with changing organizational needs.

Let’s embrace efficient testing strategies and disciplined collaboration, striving for a future where software isn’t just fast and functional but also robust, reliable, and aligned with both user needs and business goals.

Author

Sixsentix

Sixsentix is a leading provider of Software Testing Services, QA Visual Analytics and Reporting, helping enterprises to accelerate their Software Delivery. Our unique risk-based Testing and QACube ALM Reporting and Dashboards provide businesses with unprecedented quality and transparency across Software Delivery projects for faster time-to-market. Sixsentix customers include the largest banks, financial services, insurance, and telecom providers, among others. Sixsentix Onsite and Nearshore (SWAT) services deliver optimized testing outcomes at significantly lower costs and help customers scale to keep pace with digitalization.

Sixsentix is an EXPO Exhibitor at EuroSTAR 2024. Join us in Stockholm.