Economic uncertainty looms like a dark cloud over businesses, casting a shadow of unpredictability and challenges. From sudden market fluctuations to geopolitical events and policy changes, the business landscape is filled with obstacles that can cause budgets to shrink, timelines to shorten, and resources to become scarce. And it is global: the economic uncertainty that accompanies 2023 affects organizations around the world and across industries.

Effective test management can be a key strategy here, providing a solid foundation to reduce the impact of economic uncertainty and enable rapid adaptation to market changes. In this blog post, we look at test management and its potential to counter the adverse effects of economic uncertainty.

Understanding Economic Uncertainty

Economic uncertainty refers to a condition in which the future state of the economy, including factors such as growth, inflation, employment, and overall financial stability, becomes unpredictable. Let’s break down the sources of uncertainty and its potential consequences.

Sources of Economic Uncertainty

- Market fluctuations: rapid shifts in supply and demand, changes in consumer behavior, or economic downturns can create a volatile and uncertain market environment.

- Geopolitical factors: political instability, trade conflicts, or regulatory changes can interrupt economic cycles and introduce uncertainty.

- Policy changes: changes in fiscal policies, tax regulations, or government interventions can impact business operations and investment decisions, leading to increased uncertainty.

- Global events: natural disasters and global economic or health crises (such as the COVID-19 pandemic) can significantly affect businesses worldwide.

Consequences of Economic Uncertainty on Businesses

- Lower consumer confidence: economic uncertainty can harm consumer confidence, leading to cautious spending patterns and a decline in demand for products and services. Companies might see lower revenue as customers are more likely to cut expenses.

- Financial instability: fluctuating market conditions and uncertain economic outlooks can pose financial challenges, including cash flow constraints, difficulty securing financing, or increased borrowing costs.

- Investment hesitation: economic uncertainty often makes businesses more risk-averse, causing delays in capital investments, expansion plans, or research and development initiatives.

- Supply chain disruptions: uncertainty can impact supply chains, causing disruptions in sourcing materials, increased costs, or delays in production and delivery.

The Value of Effective Test Management

Effective software test management can play a vital role in mitigating the effects of economic uncertainty by giving businesses a structured approach to quality assurance. The testing process is comprehensive and includes test planning, creation, execution, and defect management, all of which are crucial to delivering high-quality software to end users.

Risk Management & Early Bug Detection

When implemented effectively, test management plays a pivotal role in risk management and the early detection of bugs, benefiting companies in numerous ways.

By conducting thorough software testing, organizations can manage product-related risks by identifying and addressing them in the early stages of development. This proactive approach prevents these defects from escaping into production – when they are more costly to fix – and impacting the end-user’s experience. The end result is a reliable software product that meets business requirements and customer expectations.

High Flexibility & Adaptability

During uncertain times, project requirements may change frequently due to evolving market conditions or business priorities. Incorporating Agile practices into your software test management enhances the organization’s ability to respond quickly to new requirements or changes in customer demand. Test managers collaborate with other stakeholders to understand the updated requirements, adjust test plans and strategies accordingly, and communicate any necessary changes to the testing team.

This way, companies can optimize software functionality and align it with shifting economic landscapes.

Incorporating Test Automation

Automation plays a significant role in reducing costs and improving efficiency in software testing. Test managers use automation tools to run tests that are prone to human error or extremely time-consuming when performed manually. This lets businesses complete complex tests in a shorter time frame and with greater confidence in the results. Because automation reduces the need for manual intervention, it lowers the risk of human error and frees testers to focus on other critical aspects of the testing process.
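To make this concrete, here is a minimal sketch of an automated regression check written with Python's built-in `unittest` module. The `apply_discount` function and its test cases are hypothetical and exist only for illustration; the point is that a repetitive, error-prone manual check becomes a table of cases that can be re-run on every change.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical business rule, used only to have something to test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    # Each tuple is (price, percent, expected); adding a case is one line.
    CASES = [
        (100.0, 0, 100.0),
        (100.0, 25, 75.0),
        (19.99, 10, 17.99),
        (50.0, 100, 0.0),
    ]

    def test_known_cases(self):
        for price, percent, expected in self.CASES:
            # subTest reports each failing case separately.
            with self.subTest(price=price, percent=percent):
                self.assertEqual(apply_discount(price, percent), expected)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)


def run_suite() -> unittest.TestResult:
    """Load and run the checks, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_suite()
```

A suite like this can be triggered from a CI job, so the same checks run identically every time without a tester repeating them by hand.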

Enhance Efficiency with a Test Management Platform

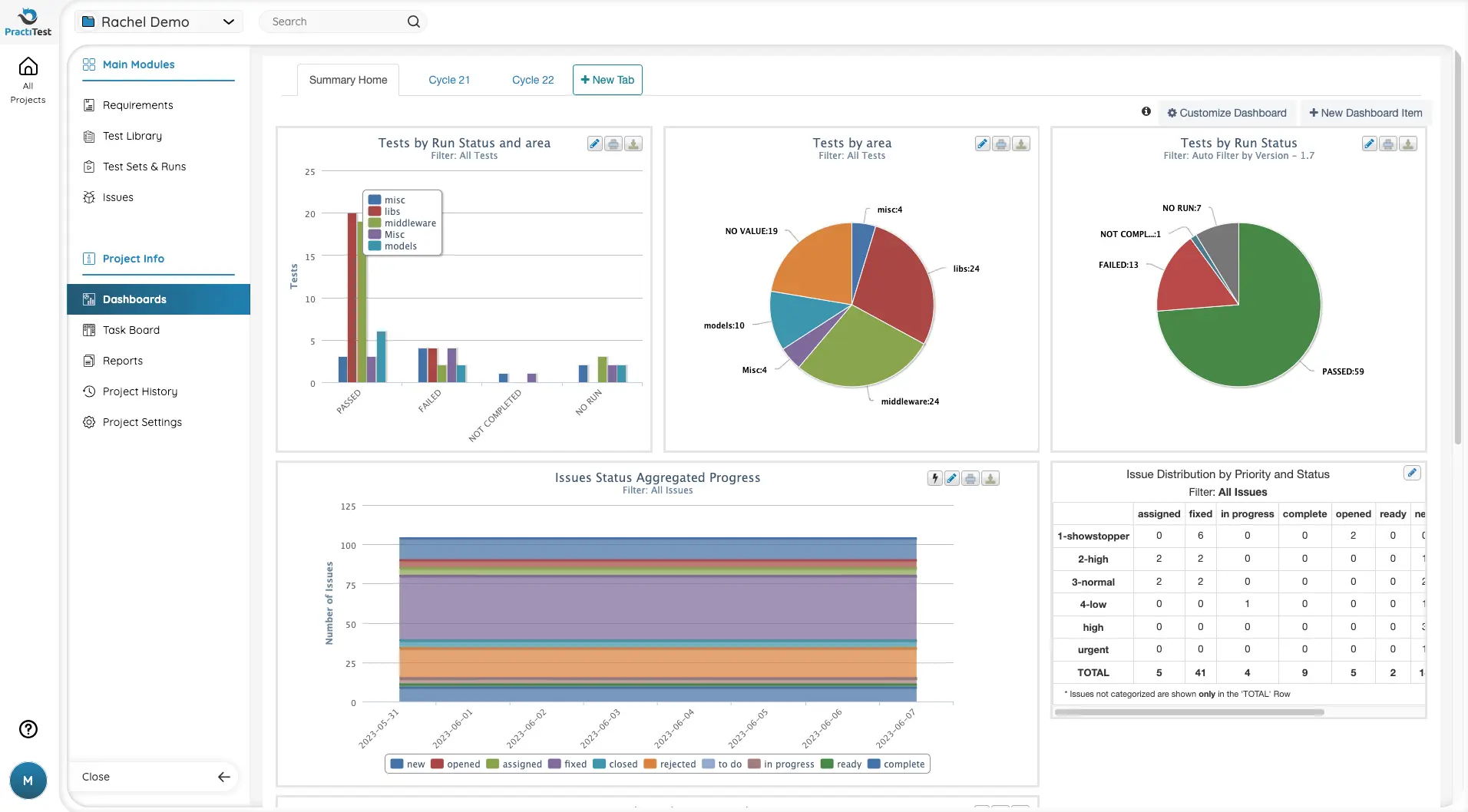

A great way to further improve software test management is to use a dedicated test management tool. These platforms offer a centralized solution for managing all types of testing activities, such as planning, executing, tracking, and reporting. This makes it easier to manage test cases and defects, categorize them by status, prioritize them, and assign them across a team that shares the same view of the work.

One of the main benefits of these platforms is the reusability of tests. Rather than creating tests from scratch, QA testers can save precious time by reusing existing tests in other relevant projects or sprints. Tests designed for automated execution can also be managed through a test management platform. By integrating with automation frameworks and tools, QA managers can manage all types of tests within one platform and gain full transparency over the testing process.

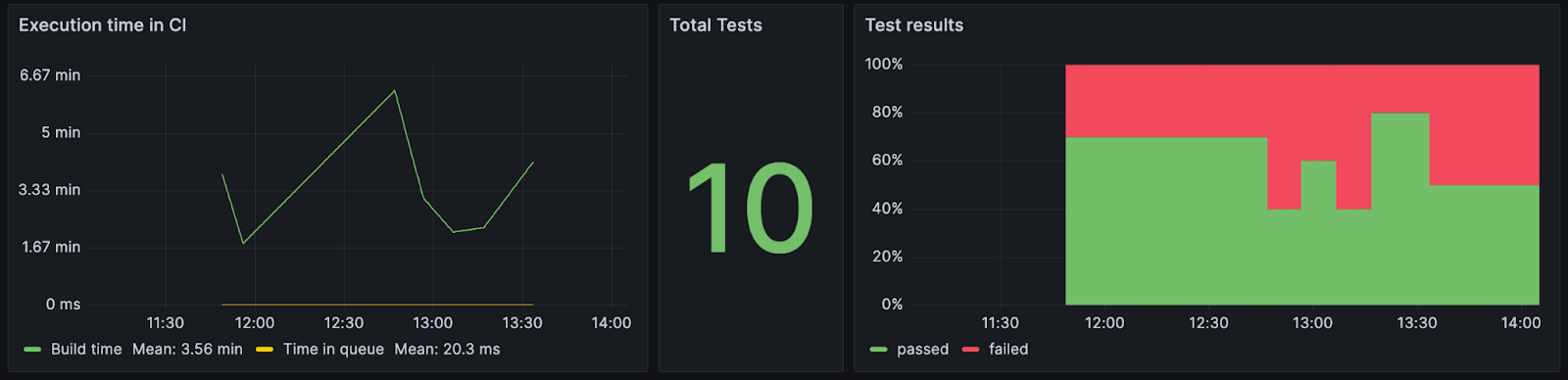

Test management platforms provide comprehensive reporting capabilities, enabling test managers to generate meaningful reports of different testing artifacts. These reports help identify bottlenecks, track important QA metrics, and enable data-driven decision-making for process improvement.
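As a rough illustration of the kind of metric such reports are built on, the sketch below computes a pass rate and the share of blocked tests from a list of run records. The `RunRecord` type, the status names, and the sample data are assumptions made for this example; they do not reflect any particular platform's data model or API.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class RunRecord:
    name: str
    status: str  # assumed vocabulary: "passed", "failed", or "blocked"


def summarize(runs: list) -> dict:
    """Return basic QA metrics for a collection of test run records."""
    counts = Counter(run.status for run in runs)
    executed = counts["passed"] + counts["failed"]
    total = len(runs)
    return {
        "total": total,
        "executed": executed,
        # Pass rate is measured against executed tests only.
        "pass_rate": counts["passed"] / executed if executed else 0.0,
        # A high blocked share often points to an environment bottleneck.
        "blocked_share": counts["blocked"] / total if total else 0.0,
    }


if __name__ == "__main__":
    sample = [
        RunRecord("login", "passed"),
        RunRecord("checkout", "passed"),
        RunRecord("export", "failed"),
        RunRecord("payments sandbox", "blocked"),
    ]
    print(summarize(sample))
```

Tracking numbers like these over several sprints, rather than as a single snapshot, is what makes them useful for spotting trends.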

With a test management platform, test managers and teams can streamline and optimize their testing efforts, resulting in improved efficiency, enhanced collaboration, and higher-quality software.

3 Tips for Effective Test Management

Here are three tips to help you navigate through these challenges and ensure effective test management:

Understanding & Adjusting Objectives

As customer and business needs rapidly change during economic uncertainty, it is essential for QA managers to closely collaborate with stakeholders. By working together, they can gain a deep understanding of the evolving needs and align internal QA objectives accordingly.

Transparent communication and increased collaboration are key elements of aligning testing assignments with the dynamic requirements. Prioritizing testing tasks according to these needs ensures that limited resources are utilized effectively, optimizing efficiency and customer satisfaction.
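One simple way to put this kind of prioritization into practice is a risk-based ranking. The sketch below is a heuristic under stated assumptions, not a prescribed method: the `QaTask` fields, the 1 to 5 scoring scale, and the sample tasks are all hypothetical. It scores each task as impact times likelihood, ranks tasks by score per hour of effort, and greedily fills the available time budget.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QaTask:
    name: str
    business_impact: int     # assumed scale: 1 (low) to 5 (critical)
    failure_likelihood: int  # assumed scale: 1 (unlikely) to 5 (likely)
    effort_hours: float      # must be greater than zero

    @property
    def risk_score(self) -> int:
        return self.business_impact * self.failure_likelihood


def plan(tasks: list, budget_hours: float) -> list:
    """Greedy selection: highest risk per hour first, while budget remains."""
    ranked = sorted(
        tasks, key=lambda t: t.risk_score / t.effort_hours, reverse=True
    )
    selected, remaining = [], budget_hours
    for task in ranked:
        if task.effort_hours <= remaining:
            selected.append(task)
            remaining -= task.effort_hours
    return selected


if __name__ == "__main__":
    backlog = [
        QaTask("checkout regression", 5, 4, 6),
        QaTask("login smoke", 5, 2, 1),
        QaTask("report export", 2, 3, 4),
        QaTask("profile UI polish", 1, 2, 3),
    ]
    for task in plan(backlog, budget_hours=8):
        print(task.name, task.risk_score)
```

A greedy ranking like this is not guaranteed to find the best possible combination, but it is easy to explain to stakeholders and easy to re-run when priorities shift.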

Embracing Modern Agile Practices

Agile methodologies offer numerous benefits in uncertain times. With Agile principles, such as flexibility, collaboration, and shifting left, organizations can respond quickly to changing needs and adapt their testing processes accordingly.

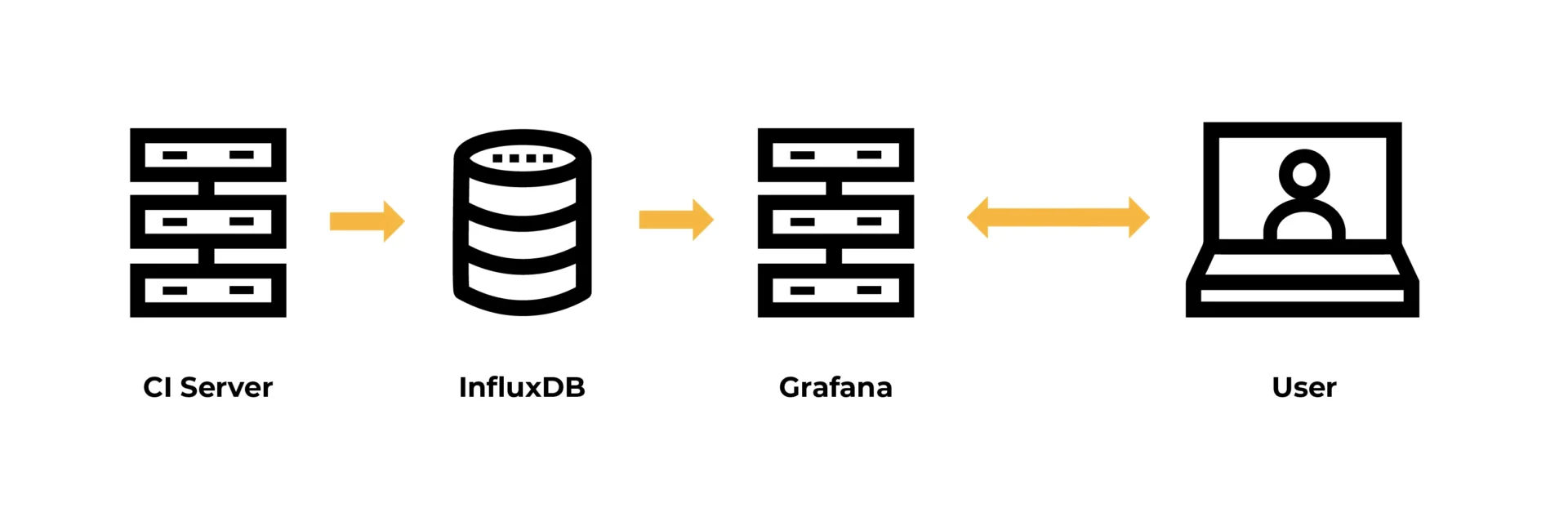

Incorporating concepts like Continuous Integration and Continuous Delivery (CI/CD) enables automated and frequent software releases, allowing for quick feedback and efficient bug fixes. Agile testing techniques such as exploratory testing, BDD, and automation further enhance adaptability and speed in a rapidly changing environment.

Leveraging a Variety of Testing Tools

The final tip for effective test management is to use a variety of testing tools. Using multiple test automation and CI/CD tools covers diverse testing tasks and allows comprehensive, automated testing to be completed faster. In addition, implementing a robust test management platform centralizes testing activities, streamlines collaboration, and provides clear end-to-end visibility into testing progress. Together, these tools help optimize testing efforts and produce higher-quality deliverables.

Summary

In the face of economic uncertainty, effective test management becomes essential for businesses to navigate challenges, mitigate risks, and deliver high-quality software products. In uncertain times, understanding dynamic customer needs, embracing modern Agile practices, and leveraging testing tools can help test managers better align with evolving customer requirements and enhance testing efficiency.

Additionally, leveraging testing automation tools and a robust test management platform such as PractiTest can increase productivity and ensure effective team collaboration. By implementing these strategies and adopting a proactive approach, organizations can navigate economic uncertainty with confidence, delivering reliable software that meets customer expectations.

Author

PractiTest

PractiTest is an end-to-end SaaS test management platform that centralizes all your QA work, processes, teams, and tools into one platform to bridge silos, unify communication, and enable one source of truth across your organization.

With PractiTest you can make informed, data-driven decisions based on end-to-end visibility provided by customizable reports, real-time dashboards, and dynamic filter views. Improve team productivity: reuse testing elements to eliminate repetitive tasks, plan work based on AI-generated insights, and enable your team to focus on what really matters.

PractiTest helps you align your testing operation with business goals and deliver better products faster.

PractiTest is an exhibitor at EuroSTAR 2024. Join us in Stockholm.

Author: Eduardo Amaral, Quality Management Senior Manager at
