🎮 Imagine you’ve put in the work—conducting rigorous testing, analyzing systems, and ensuring exceptional software quality. But in a competitive industry, expertise alone won’t always be enough to set you apart. Employers and clients seek tangible proof of your skills, and that’s where professional certifications make the difference. They validate your expertise, strengthen your credibility, and unlock new career opportunities. It’s not just about what you know—it’s about demonstrating your mastery to the world.

Why Certifications? Because Expertise Deserves Recognition!

You might be a fantastic tester or an aspiring software quality pro, but how do you prove it? Certifications act like power-ups for your career. They:

✅ Validate Your Skills – Employers trust certifications as proof that you’ve mastered industry-recognized standards.

✅ Enhance Your Resume – Stand out in a competitive job market with credentials that highlight your expertise.

✅ Boost Your Confidence – Knowing you have proven, industry-backed skills strengthens your professional credibility.

ISTQB® – The Global Standard in Software Testing Certifications

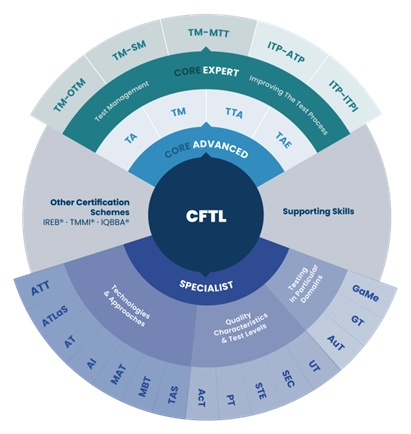

When it comes to software testing certification, there’s one name that rules them all: ISTQB® (International Software Testing Qualifications Board). Whether you’re a newbie or a seasoned tester, there’s a certification for you:

🎯 ISTQB® Certified Tester Foundation Level (CTFL) – The perfect starting point for your journey in software testing. Learn the fundamentals and build a strong foundation!

🚀 ISTQB® Agile Tester – Agile is everywhere, and ISTQB® certifications in Agile prove you can test like a pro in fast-paced development teams.

- Agile Technical Tester (CT-ATT) – Perfect for Agile teams, covering technical skills like TDD and CI/CD.

- Agile Test Leadership at Scale (CT-ATLaS) – Focuses on scaling Agile testing leadership across teams.

🏆 ISTQB® Advanced & Expert Levels – Take your career to the next level with specializations in test automation, test management, security testing, and more!

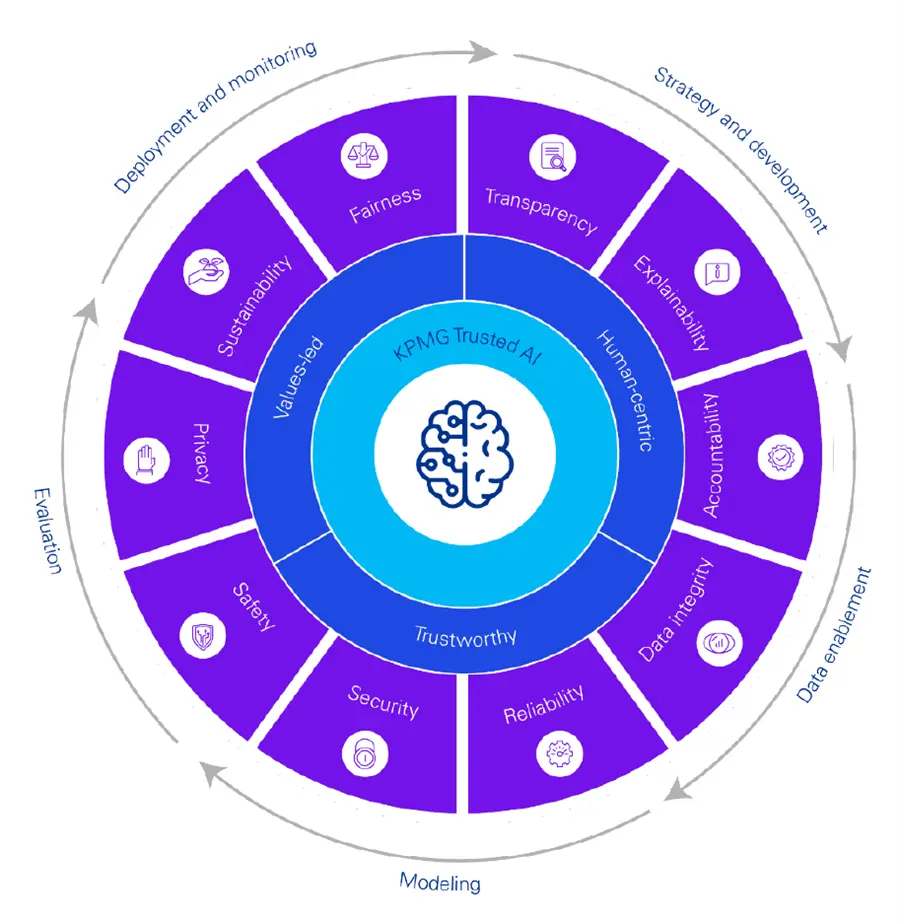

🔍 ISTQB® Specialist Certifications – Broaden your expertise with targeted certifications in areas like Mobile Application Testing, Usability Testing, Performance Testing, and AI Testing!

The best part? These certifications are recognized worldwide, giving you an edge no matter where you work. With over 1 million exams taken across 130+ countries, ISTQB® certifications have become the industry benchmark for software testing excellence.

How Does ISTQB® Certification Benefit You?

Certifications are more than just a title. They increase your earning potential and improve your job security. In fact, studies show that certified professionals earn higher salaries compared to their non-certified counterparts. Plus, in a competitive job market, having a certification could be the deciding factor between you and another candidate.

Here’s how ISTQB® certification can supercharge your career:

- Career Growth: Certifications open doors to promotions, leadership roles, and exciting job opportunities.

- Industry Recognition: Demonstrate to hiring managers and peers that you are committed to continuous learning and excellence in your craft.

- Networking Opportunities: Become part of an elite group of certified professionals and connect with industry experts.

- Competitive Edge: Differentiate yourself from other testers who rely solely on experience.

Looking to add even more weight to your testing expertise? The A4Q Practical Tester certification, now officially endorsed by ISTQB®, is your go-to choice! Unlike traditional theory-based exams, this certification is all about hands-on experience, because real-world problems demand real-world solutions.

🔹 Learn by Doing – Dive into practical scenarios, case studies, and hands-on exercises designed to hone your critical thinking skills.

🔹 Bridge the Gap – Take your theoretical knowledge and turn it into effective, real-world testing strategies.

🔹 Boost Your Employability – Employers value testers who don’t just know their craft but can also apply it under real-life conditions.

Pairing your ISTQB® CTFL certification with an ISTQB® Add-On Practical Tester certification makes you a well-rounded professional, proving you’ve got both the knowledge and the skills to back it up.

How to Get Certified? Meet iSQI – Your Global Exam Provider!

So, where do you go when you’re ready to get certified? That’s where iSQI comes in! As a global authorized exam provider, iSQI makes it easy for you to:

🖥 Take your exam online from the comfort of your home.

🌎 Access exams in multiple languages across different regions—because software quality is a global language.

Your Next Move? Get Certified & Stand Out!

If you want to level up in your career, professional certifications aren’t just an option—they’re a game-changer. So, whether you’re just starting out or aiming for that next promotion, getting certified is one of the smartest moves you can make.

Here’s what you can do next:

✅ Research the best ISTQB® certification for your career goals.

✅ Visit iSQI’s website to find out how to register for an exam.

✅ Start studying and preparing for your certification. Many online resources, practice exams, and our special ISTQB® exam preparation platform are available to help you succeed.

✅ Take the exam and showcase your new achievement!

💡 Ready to take the leap? Check out iSQI’s certification options and start your journey today!

Your skills deserve recognition. Your career deserves growth. Your future deserves the best. Get certified, and unlock new opportunities today! Success is yours.

Author

iSQI Group

iSQI is an exhibitor at this year’s EuroSTAR Conference EXPO. Join us in Edinburgh, 3-6 June 2025.