In today’s technology field it’s almost impossible to discuss the future without mentioning the big impact Artificial Intelligence (AI) will have, and Generative AI in particular. The concept behind generative AI is not new, but it has broken through to a wider audience in recent years as AI tools such as OpenAI ChatGPT, DALL-E and Microsoft Copilot became available. Generative AI is able to (you guessed it) generate outputs such as text, images, audio and all of their derivatives, which until recently were the exclusive domain of the human brain.

Generative AI is driving a wave of technological innovation that can be felt far and wide. This is no different for our own field of expertise: Digital Test Management. We are still witnessing the early days of a transformation that will impact most aspects of how we test and validate the software applications we use every day. Verifying that an application works as designed and intended has always been paramount: how can we earn the trust of people and organizations in products and services if we don’t have the evidence to show for it? Generative AI will play a big role in transforming our ways of testing, allowing people to work more efficiently, automate many tasks and greatly improve test coverage. In the end this will make it possible to find and fix more defects earlier, ensuring a much smoother (and in many cases safer) end-user experience.

To this end, we want to introduce the Deloitte Digital Tester, an AI-driven test platform capable of supplementing human testers as an automated and independent tester across the entire testing journey!

Introducing the Deloitte Digital Tester

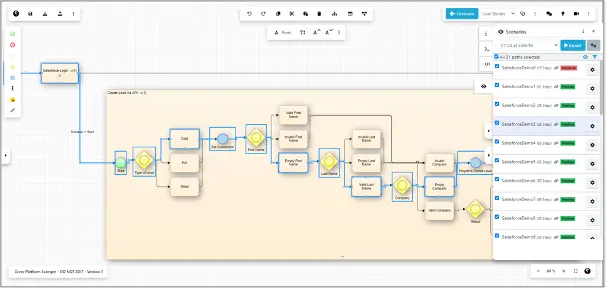

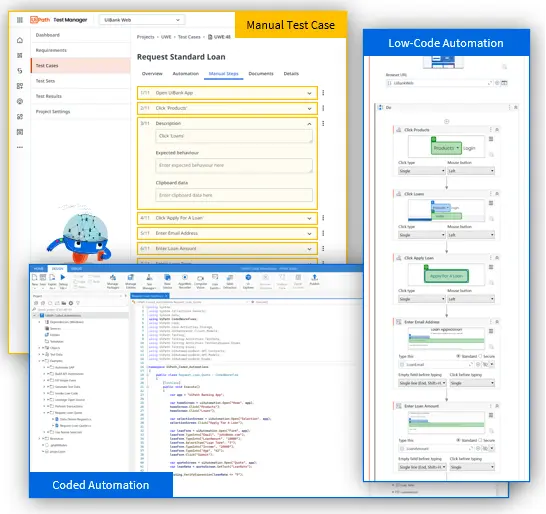

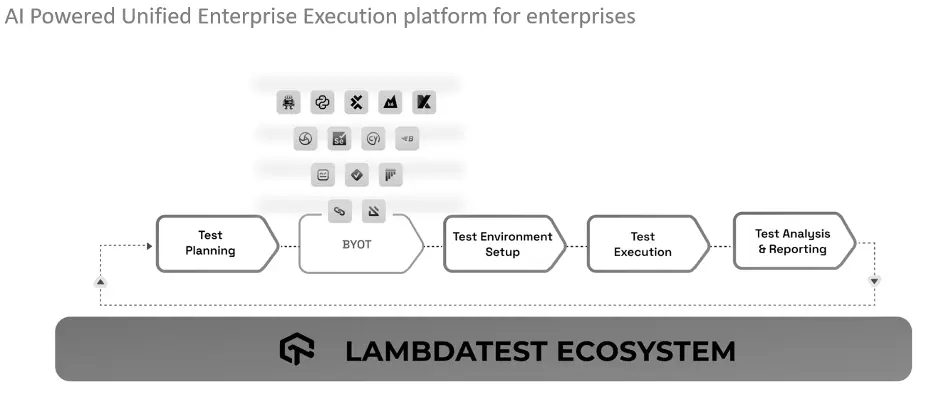

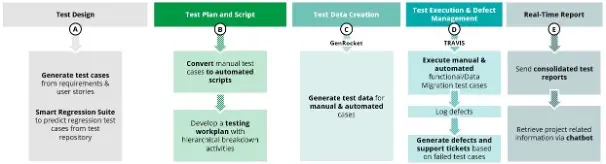

Recent advances have made it possible to pioneer a novel concept: a full-time, AI-driven ‘digital tester’ that is capable of interpreting requirements and user stories, creating test cases from them, executing those test cases, logging defects and finally reporting on the results. This is not science fiction; it is already reality. The Deloitte Digital Tester solution was built to operate across all phases of testing and fully integrates with existing testing tools and ecosystems. It can autonomously perform the following tasks:

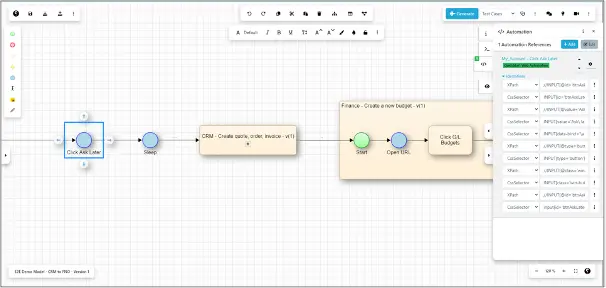

- Test Design: generate test cases from requirements and user stories.

- Test Planning & Scripting: generate automated test cases or convert existing ones.

- Test Data Creation: generate relevant test data to support test execution.

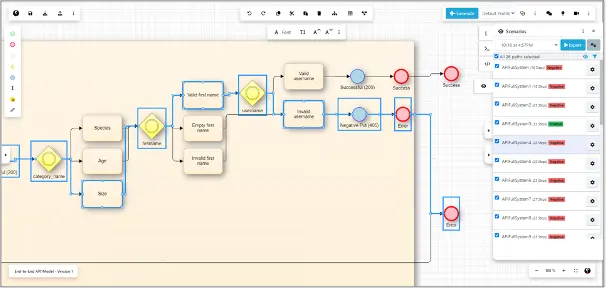

- Test Execution: execute test cases and validate the results, logging defects and creating support tickets where required.

- Reporting: clearly show test execution progress and outcome analysis by generating consolidated reports.

The Deloitte Digital Tester is not intended to replace human testers, but rather to supplement them. This brings some key benefits: human testers will be able to focus on defining and validating business-focused test strategies, design and architecture. They will be able to execute more value-adding test cases in parallel with the digital tester, evaluate the overall results and confirm their accuracy. Freeing up human testers also allows for more exploratory testing and validation of business interactions in the application. It also keeps testers motivated by automating many repetitive tasks and repeated regression testing. Additionally, the specific skills of the Deloitte Digital Tester allow the solution to do many additional test runs and eliminate blind spots, strongly increasing test coverage while reducing the overall time required to script and run tests.

Benefits of Next-Gen Automation

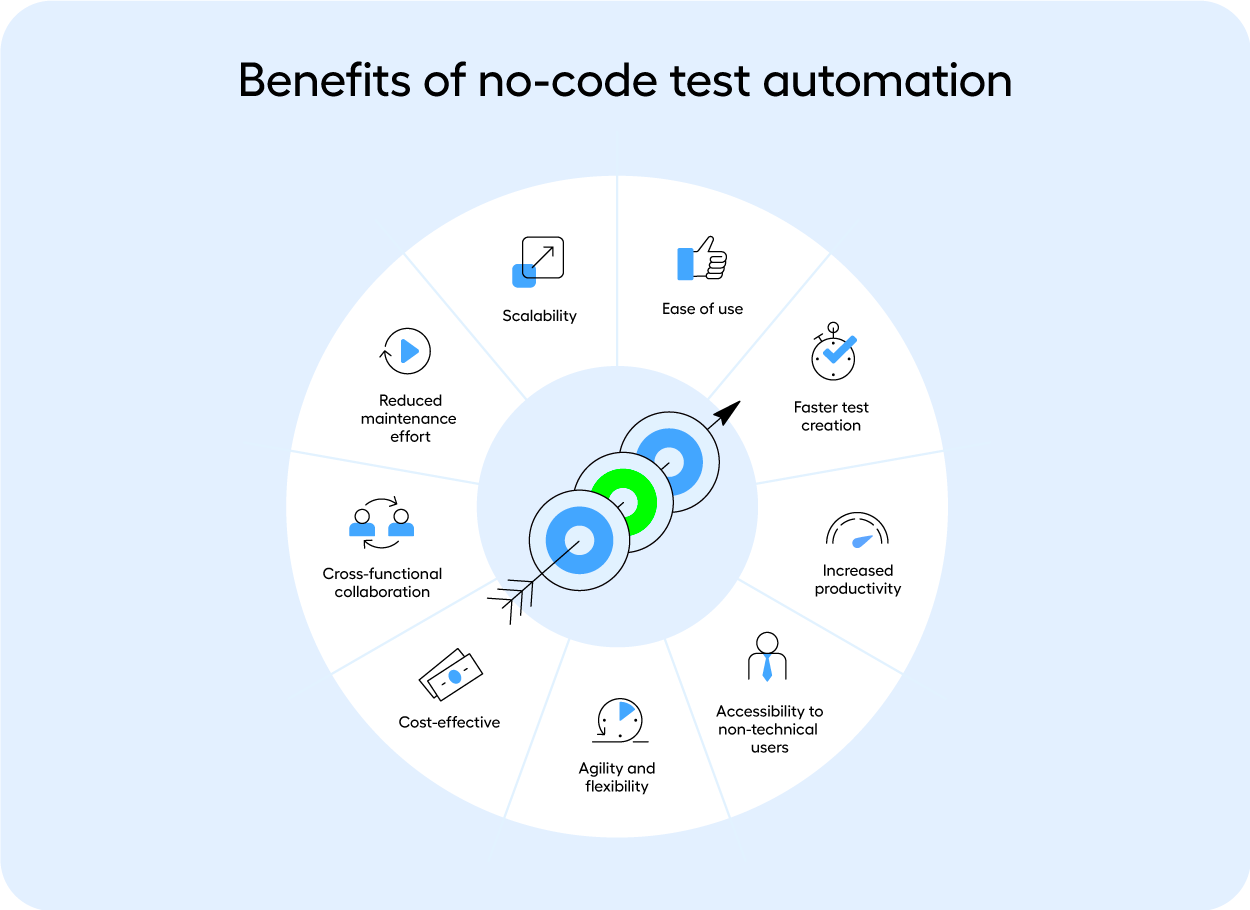

Our AI solution plays a key role in taking Test Automation to the next level. For several years already, the concept of Test Automation has been firmly rooted in the test management sphere: before we had AI, test cases were already being automated so they could be run as many times as required, with no human input other than creating the automated test cases in the first place and interpreting the results. This allowed for quicker test execution, high test case reusability, improved regression testing and even the creation of large amounts of test data to assist manual testing. With today’s AI capabilities, the Deloitte Digital Tester is taking the next step: it is able to automate the creation and execution of test cases and evaluate the outcomes. This approach brings many benefits; we go through the main ones here:

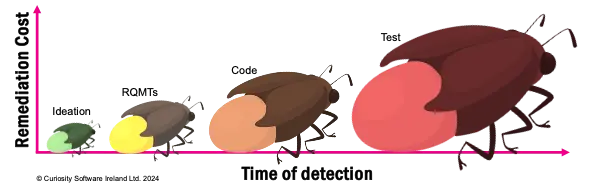

- Continuous Testing becomes a truly integral part of the software development process, rather than testing being handled during specific phases, often post-development. The Deloitte Digital Tester allows us to automate testing activities and ensure quicker execution and more efficient identification of defects. This can be realized through in-sprint automation, where test cases are created while development is still ongoing. These test cases can expose defects early, so that they can be addressed as soon as possible. The sooner a defect is found, the lower its impact and the lower the cost to fix it.

- AI/ML-based Test Data Management utilizes AI and machine learning to optimize the generation of representative test data while at the same time masking sensitive information such as personal data or confidential information. Integrating the Deloitte Digital Tester with the wider testing ecosystem makes the benefits even greater by increasing efficiency across the full landscape.

- Self-Healing allows automated test cases to update themselves in response to changes in the application under development. Traditionally, automated test scripts required a certain level of human intervention to cover changes in the application being tested. The Deloitte Digital Tester is AI-enabled and scriptless, and it employs machine learning algorithms to dynamically adapt to changes in the application, reducing the need for maintenance. This is particularly relevant in Agile CI/CD environments, where rapid iterations depend on efficient regression testing.

- Increased Test Coverage is realized by AI-driven automated testing. This allows for a scalable and broad-spectrum approach to validate end-to-end (E2E) processes. Additionally, AI algorithms enable us to identify and prioritize test cases based on their potential impact on critical business processes, thereby optimizing test coverage to focus on the areas with the highest risk.

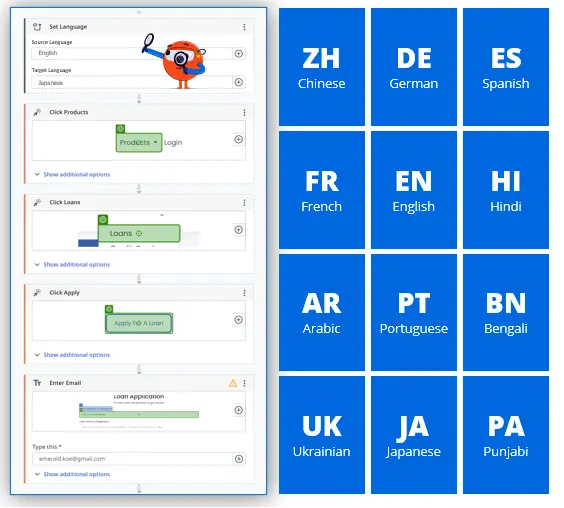

- Product Validation at Scale is a complex-sounding term that signifies how the Deloitte Digital Tester enables organizations to industrialize testing by automating repetitive testing tasks and streamlining the testing process. By standardizing testing practices and creating reusable test assets, organizations can achieve consistency and efficiency in product validation efforts. Additionally, AI capabilities facilitate the analysis of test results and the identification of trends or patterns across multiple product lines, enabling continuous improvement of testing processes and product quality.
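To make the risk-based prioritization mentioned under Increased Test Coverage more concrete, here is a minimal sketch in Python. It is not the Deloitte Digital Tester's actual algorithm, which this article does not describe. The `CaseProfile` type, the 1-to-5 impact scale, the likelihood estimates and the "impact times likelihood" score are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaseProfile:
    """Hypothetical description of one test case (not a real product type)."""
    name: str
    business_impact: int        # assumed scale: 1 (low) to 5 (critical)
    failure_likelihood: float   # assumed estimate between 0.0 and 1.0


def risk_score(case: CaseProfile) -> float:
    # Classic risk heuristic: impact multiplied by likelihood.
    return case.business_impact * case.failure_likelihood


def prioritize(cases: list[CaseProfile], budget: int) -> list[CaseProfile]:
    """Return the `budget` highest-risk test cases, highest risk first."""
    ranked = sorted(cases, key=risk_score, reverse=True)
    return ranked[:budget]


# Illustrative data only; names and numbers are made up.
cases = [
    CaseProfile("checkout_payment", 5, 0.4),
    CaseProfile("profile_avatar_upload", 1, 0.6),
    CaseProfile("login", 5, 0.1),
    CaseProfile("invoice_export", 3, 0.5),
]

selected = prioritize(cases, budget=2)
print([case.name for case in selected])
# prints ['checkout_payment', 'invoice_export']
```

In practice the likelihood estimate would come from somewhere, for example defect history or recent code changes. The sketch simply takes it as given.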

An Impact that Matters

The above brings us to a key question: what impact does the Deloitte Digital Tester make once an organization chooses to implement it? Especially for projects with multiple releases, the benefits can be very high compared to the initial investment. It is important to note that the business case for implementing the Digital Tester depends on the testing maturity level of the organization. The stronger an organization’s testing capabilities are, the faster the Digital Tester will break even and start to provide ongoing benefits and efficiencies compared to traditional automation or manual testing. However, even at low maturity levels, the Deloitte Digital Tester allows an organization to break even 2-4 months earlier compared to manual testing.

Let’s assume that an organization is running on average 500 test cases per month, and they choose to automate up to 80% of their testing lifecycle efforts.[1] If we are looking at a timeline of 1 year, we can distinguish 2 phases:

- Ramp-Up Period (4 months in case of high maturity): The Digital Tester requires an initial period during which the solution is trained and its automation capabilities are built. If more traditional testing is already going on during this time, additional resourcing will be required to maintain these efforts in parallel.

- Benefit Realization Period (8 months & beyond): Once implemented, efficiencies and automation come into play. This drastically reduces the effort needed to automate activities and sets the stage for manual testing to be much more focused and exploratory, for example cross-functional end-to-end testing, business interaction testing and risk-based deep dives.

[1] The complexity distribution of test cases in this example is 30% simple, 30% medium, 20% complex, 20% very complex.
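The two phases above can be turned into a simple cumulative cost comparison. The sketch below uses the figures from this example (500 test cases per month, 80% automation, a 4-month ramp-up, a 1-year horizon). Everything else is a placeholder assumption: the cost units, the per-case costs, the monthly ramp-up investment, and the simplification that 80% of test cases (rather than 80% of lifecycle effort) are automated. The result illustrates the shape of the calculation only and says nothing about the real economics of the Digital Tester.

```python
def cumulative_costs(months, cases_per_month, automated_pct, ramp_up_months,
                     manual_cost, automated_cost, ramp_up_investment):
    """Return (manual, digital): cumulative cost per month for each approach."""
    automated = cases_per_month * automated_pct // 100
    remaining_manual = cases_per_month - automated
    manual_total = digital_total = 0
    manual, digital = [], []
    for month in range(1, months + 1):
        # Manual-only baseline: every case is executed by hand each month.
        manual_total += cases_per_month * manual_cost
        if month <= ramp_up_months:
            # Ramp-up: manual testing continues in parallel with the build-out.
            digital_total += cases_per_month * manual_cost + ramp_up_investment
        else:
            # Benefit realization: most cases run automated at lower cost.
            digital_total += (remaining_manual * manual_cost
                              + automated * automated_cost)
        manual.append(manual_total)
        digital.append(digital_total)
    return manual, digital


def break_even_month(manual, digital):
    """First month where the digital approach is no more expensive, or None."""
    for month, (m, d) in enumerate(zip(manual, digital), start=1):
        if d <= m:
            return month
    return None


manual, digital = cumulative_costs(
    months=12,
    cases_per_month=500,      # from the example above
    automated_pct=80,         # from the example above
    ramp_up_months=4,         # high-maturity ramp-up from the example above
    manual_cost=10,           # placeholder cost units per manual execution
    automated_cost=2,         # placeholder cost units per automated execution
    ramp_up_investment=1500,  # placeholder extra cost per ramp-up month
)
print(break_even_month(manual, digital))
# prints 6
```

Changing the three placeholder values moves the break-even month, which is why the business case depends so strongly on an organization's own cost structure and maturity.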

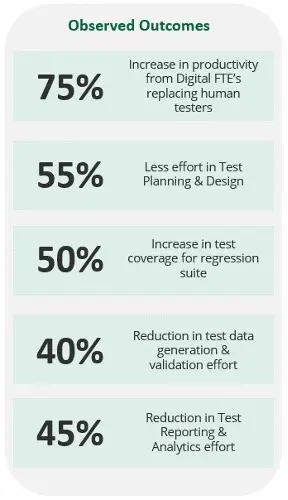

In this particular use case, we observe the following quality outcomes by increasing test coverage and next-gen automation:

Future-Proofing your Testing Capabilities

The advent of Generative AI, exemplified by the Deloitte Digital Tester, marks a big step forward in the evolution of software testing and quality assurance. As our world becomes ever more complex, increased consumer scrutiny, regulations and market trends are challenging organizations in many ways to be faster and more agile. In this context, it’s hard to overestimate the increased importance of testing new software applications in the most efficient and thorough way possible. The Deloitte Digital Tester can play a pivotal role in taking your testing capabilities to the next level. This enables you to ensure the smoothest and safest end-user experience for products and services, which is crucial to maintaining the trust of all stakeholders. It is often said that building a reputation takes years but losing it can happen in a matter of days or even hours.

Rising to meet these challenges, the Deloitte Digital Tester represents a pivotal shift towards a future where AI-driven automation augments human testing capabilities, upgrading the way we approach software testing. As organizations embrace this technological evolution, they not only future-proof their testing processes but also pave the way for innovation and excellence in the way they deliver their products and services.

Contact Details

Thomas Clijsner, Partner, Deloitte Risk Advisory

Tel: +32 479 65 06 96 Email: tclijsner@deloitte.com

Rohit Neil Pereira, Principal, Deloitte Consulting LLP

Tel: +1 916 803 0079 Email: ropereira@deloitte.com

Ramneet Singh, Director, Deloitte Risk Advisory

Tel: +32 471 61 89 67 Email: ramnesingh@deloitte.com

Dirk Evrard, Manager, Deloitte Risk Advisory

Tel: +32 472 75 92 03 Email: dievrard@deloitte.com

Deloitte is an exhibitor at EuroSTAR 2024. Join us in Stockholm!