Let’s be honest. The dream of 100 percent test automation often turns out to be a nightmare. After all, it’s not just about writing a few scripts. Successful test automation needs to be well thought out, requires a test architecture and, above all, time. If you let the reins slip for even a few iterations, technical debt will creep in. In the worst case, the test cases become flaky.

Less spectacular, but just as painful in the long run, is gradual erosion. While the system under test keeps evolving, the automated scripts lag behind. They may still pass, but over time they become less and less meaningful.

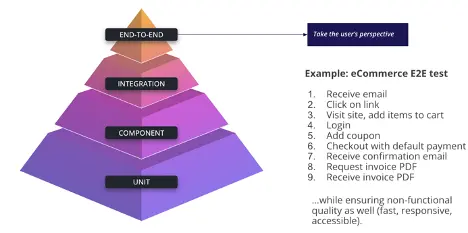

“Not true!” you are probably thinking. “We do test-driven development.” That’s laudable, but probably only part of the truth. After all, you almost certainly have them too: the functional manual testers who cover the upper part of the test pyramid and look at the system as a whole, perhaps even in interaction with other applications.

The Importance of Functional Testing

Functional testing is important. Of course, as much as possible should be checked during unit testing, but, to be honest, the nastiest bugs hide between the components. An example:

An app requires 2-factor authentication for login. For this purpose, an 8-character code consisting of letters and digits is sent by email. Unfortunately, the app’s input field only accepts digits.

This example is not made up, and it illustrates the difference between unit and system testing. Each component complied with its specification and passed its tests. Nevertheless, the overall system is unusable, because the error was not in the code but in the specification. Functional testers find such errors, and that is what makes them so valuable.
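To make the point concrete, here is a minimal Python sketch of the 2FA example. The two components, the code alphabet and the sample code are hypothetical stand-ins, not taken from the app in question. Each component passes a unit test against its own specification, while a simple end-to-end check exposes the contradiction between the two specifications.

```python
import secrets
import string

# Hypothetical component A: the email service's code generator.
# Assumed specification: 8 characters, uppercase letters and digits.
CODE_ALPHABET = string.ascii_uppercase + string.digits


def generate_code(length: int = 8) -> str:
    """Return a random one-time code that satisfies the generator's specification."""
    return "".join(secrets.choice(CODE_ALPHABET) for _ in range(length))


def meets_generator_spec(code: str) -> bool:
    return len(code) == 8 and all(c in CODE_ALPHABET for c in code)


# Hypothetical component B: the login form's input validation.
# Assumed specification: exactly 8 digits.
def input_field_accepts(text: str) -> bool:
    return len(text) == 8 and text.isdigit()


def unit_tests_pass() -> bool:
    """Each component is checked only against its own specification."""
    generator_ok = meets_generator_spec(generate_code())
    field_ok = input_field_accepts("12345678") and not input_field_accepts("1234")
    return generator_ok and field_ok


def system_test_passes() -> bool:
    """End to end: can the user type in a code the generator may legitimately send?"""
    received = "A7K2M9Q4"  # a sample code that meets the generator's specification
    return meets_generator_spec(received) and input_field_accepts(received)


if __name__ == "__main__":
    print("unit tests pass:", unit_tests_pass())        # unit tests pass: True
    print("system test passes:", system_test_passes())  # system test passes: False
```

Both unit checks succeed, yet the system-level check fails. This is exactly the kind of specification gap that only testing the assembled system reveals.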

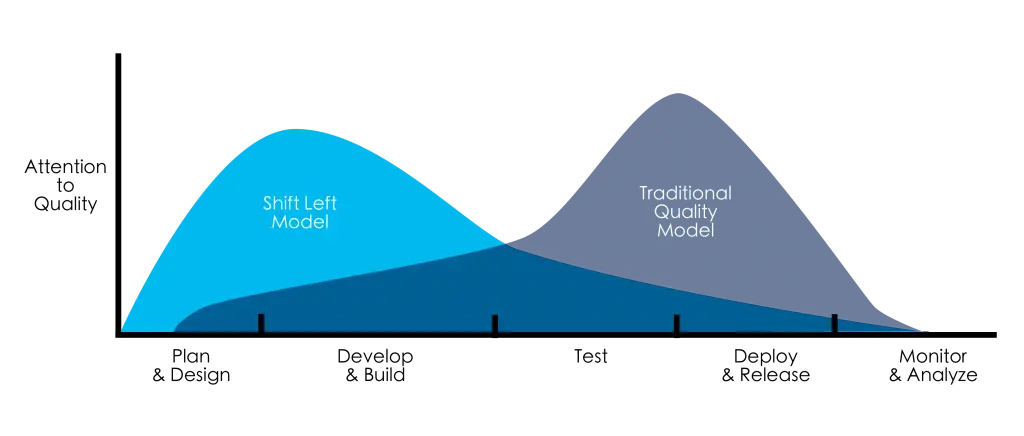

Manual testing has never been easy, but today, in the age of Agile, it’s a race against time. If manual testers don’t want to be left behind, they need to understand the intricacies of automated functional testing. Test automation relieves them of repetitive regression tests and allows them to focus on the important things, such as new features.

Fundamental Principles of Effective Functional Test Design

Several principles are equally important in manual testing and in test automation:

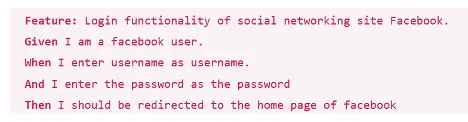

- Clarity: Clarity is key. Structured, easy-to-grasp tests improve comprehension and minimize ambiguity, which benefits all stakeholders. Visual diagrams have long helped developers simplify complex problems. Testing can benefit from similar visualization, because functional tests mirror the intricate nature of the systems under test. Visual test design improves clarity and makes it easier for test automation engineers to understand the business aspects of functional testing.

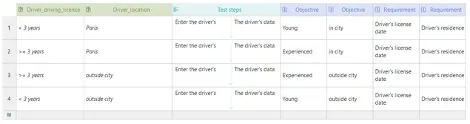

- Modularity: By breaking down complex scenarios into manageable test cases, manual testers can lay the groundwork for seamless automation, ensuring that each test remains a valuable asset throughout the software development lifecycle.

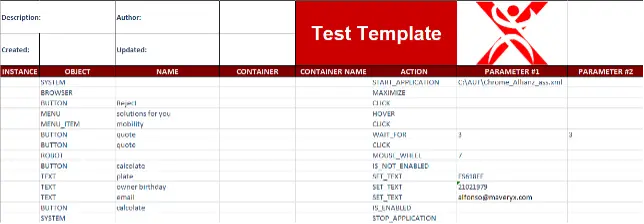

- Maintainability: Functional tests will continue to evolve, and so will the associated test scripts. Some changes affect the technical level, others the functional level. Keyword-driven testing is a proven method of separating these two levels. It allows manual testers to contribute to the maintenance of automated tests without having to write code themselves.

The goal of effective functional test design must therefore be to develop tests that are easy to maintain and update.
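The keyword-driven separation described under Maintainability can be sketched in a few lines of Python. This is a minimal illustration under assumptions: the keywords, the fake login app and the table format are hypothetical, not the syntax of any particular tool. The test case is plain data that a manual tester can read and edit (functional level), while the keyword implementations hold the technical details.

```python
from typing import Callable, Dict, List, Tuple


class FakeLoginApp:
    """Hypothetical stand-in for the system under test, so the sketch runs without a UI."""

    def __init__(self) -> None:
        self.fields: Dict[str, str] = {}
        self.logged_in = False


# Technical level: keyword implementations, maintained by automation engineers.
def enter_text(app: FakeLoginApp, field: str, value: str) -> None:
    app.fields[field] = value


def click(app: FakeLoginApp, button: str) -> None:
    if button != "Submit":
        raise ValueError(f"unknown button: {button}")
    app.logged_in = app.fields.get("user") == "alice" and app.fields.get("password") == "secret"


def check_logged_in(app: FakeLoginApp, expected: str) -> None:
    if app.logged_in != (expected == "yes"):
        raise AssertionError(f"expected logged in = {expected}, got {app.logged_in}")


KEYWORDS: Dict[str, Callable[..., None]] = {
    "Enter text": enter_text,
    "Click": click,
    "Check logged in": check_logged_in,
}

# Functional level: the test case itself is plain data a manual tester can maintain.
VALID_LOGIN: List[Tuple[str, ...]] = [
    ("Enter text", "user", "alice"),
    ("Enter text", "password", "secret"),
    ("Click", "Submit"),
    ("Check logged in", "yes"),
]


def run(test_case: List[Tuple[str, ...]], app: FakeLoginApp) -> None:
    """Look up each keyword and call its implementation with the step's arguments."""
    for keyword, *args in test_case:
        KEYWORDS[keyword](app, *args)


run(VALID_LOGIN, FakeLoginApp())  # raises AssertionError if a check fails
```

If the login button is later renamed or the UI technology changes, only the implementation of `click` needs updating. The test case data stays the same. Each keyword is also a small reusable building block, which ties in with the Modularity principle.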

Transitioning from Manual to Automated Execution

Automation is often viewed as process optimization, because it takes over error-prone, repetitive tasks. Testers can use the time gained to focus on tasks that require human judgment. Re-executing existing tests over and over at the ever-faster pace of test cycles is certainly not mentally demanding. Nor is the mechanical writing down of test procedures a great intellectual accomplishment. The real value lies in the preliminary considerations: What situations could occur? How should the system behave in each case? What can I do to push the system beyond its limits? Automation is therefore also welcome support when creating test cases, because it frees testers from the mechanical part of the work.

Studies [1] show that combining intellectual effort in test design with automated test execution increases not only test speed but also requirements and code coverage. In general, tests become more reliable when automated scripts support manual testers, especially in lengthy tests. This is particularly evident in load and performance tests, which are unthinkable without automation.

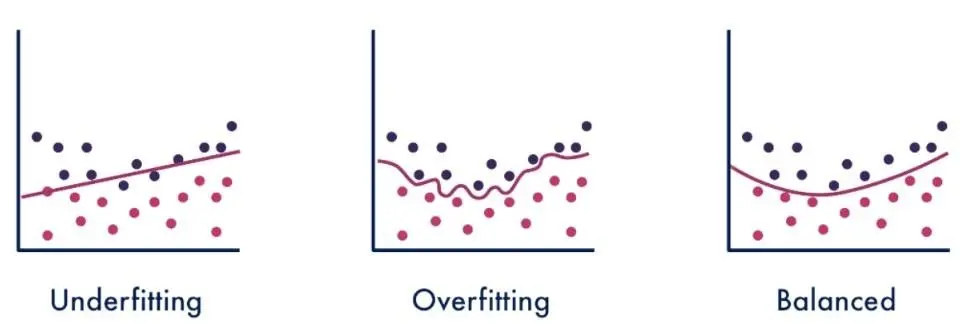

An intelligent automation strategy effectively balances human expertise with automated tasks.

A Practical Case Study – Yest Augmented by Maveryx

This example shows the successful marriage of two concepts: visual test design, represented by Yest, and codeless automated execution, represented by Maveryx.

Yest is a visual test design tool that implements a modern form of model-based testing (MBT) and test generation. It offers a whole range of functions that support the creative work of test design and speed up the painstaking task of writing test cases. Yest itself is execution-agnostic: the generated scenarios can be used for manual execution, automated execution, or both.

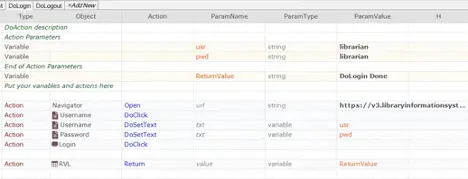

Maveryx is an automated software testing tool that provides functional UI, regression, data-driven and codeless testing capabilities for a wide range of desktop and Web technologies. Its innovative and intelligent technology inspects the application’s UI at runtime, just as a senior tester would. With Maveryx, there is no need for code instrumentation, GUI capture, GUI maps or object repositories.
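The following Python sketch illustrates the general idea of locating UI elements at runtime by what a tester sees (role and visible label) instead of through a pre-recorded object repository. It is a generic illustration, not Maveryx’s actual algorithm or API. The `Widget` model and the sample window are hypothetical.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Widget:
    """A node of the UI tree as it might be read from the running application (hypothetical model)."""

    role: str   # e.g. "button", "textbox"
    label: str  # the text a human tester sees
    children: List["Widget"] = field(default_factory=list)


def find_widget(root: Widget, role: str, label: str) -> Optional[Widget]:
    """Search the live UI tree depth-first for a widget with the given role and visible label."""
    wanted = label.strip().casefold()
    stack = [root]
    while stack:
        node = stack.pop()
        if node.role == role and node.label.strip().casefold() == wanted:
            return node
        # Push children in reverse so they are visited in on-screen order.
        stack.extend(reversed(node.children))
    return None


# A snapshot of a hypothetical login window, read at the moment the step runs.
login_window = Widget("window", "Login", [
    Widget("textbox", "User name"),
    Widget("textbox", "Password"),
    Widget("panel", "", [Widget("button", "Cancel"), Widget("button", "Submit")]),
])

print(find_widget(login_window, "button", "submit"))
```

The lookup runs against the current UI tree each time a step executes. Moving the Submit button into another panel therefore does not break the search, whereas a stored object path would need updating.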

From Visual Test Design for Manual Tests…

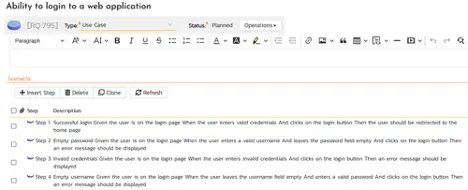

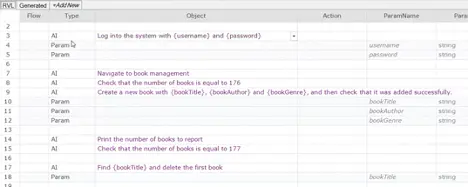

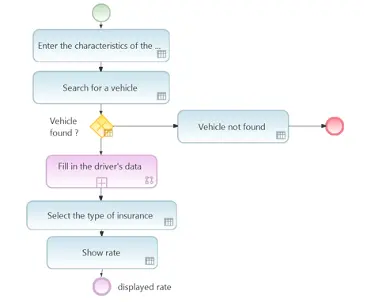

With Yest Augmented by Maveryx, functional manual testers concentrate on creating graphical workflows and defining actions, including the expected results, for the various cases to be tested. Yest then generates test scenarios from these workflows and the stored information, and these scenarios can be executed manually right away.
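Deriving scenarios from a workflow is easiest to see in code. The Python sketch below enumerates every path from start to end through a small hypothetical login workflow, where each edge carries an action and its expected result. “All paths” is just one simple coverage criterion chosen for illustration. The sketch assumes an acyclic workflow and does not describe Yest’s actual generation strategy.

```python
from typing import Dict, List, Tuple

# Hypothetical workflow model: each node maps to outgoing edges of the form
# (next node, action, expected result). "End" has no outgoing edges.
Workflow = Dict[str, List[Tuple[str, str, str]]]

LOGIN_WORKFLOW: Workflow = {
    "Start": [("Login page", "Open the app", "Login page is displayed")],
    "Login page": [
        ("Code entry", "Enter valid credentials and click Submit", "Code entry page is displayed"),
        ("End", "Enter an invalid password and click Submit", "Error message is displayed"),
    ],
    "Code entry": [
        ("End", "Enter the code received by email", "Dashboard is displayed"),
        ("End", "Enter a wrong code", "Error message is displayed"),
    ],
    "End": [],
}


def generate_scenarios(
    workflow: Workflow, node: str = "Start", end: str = "End"
) -> List[List[Tuple[str, str]]]:
    """Return every path from `node` to `end` as a list of (action, expected result) steps.

    Assumes the workflow is acyclic; a cycle would cause unbounded recursion.
    """
    if node == end:
        return [[]]
    scenarios = []
    for next_node, action, expected in workflow[node]:
        for rest in generate_scenarios(workflow, next_node, end):
            scenarios.append([(action, expected)] + rest)
    return scenarios


for number, scenario in enumerate(generate_scenarios(LOGIN_WORKFLOW), start=1):
    print(f"Scenario {number}:")
    for action, expected in scenario:
        print(f"  {action}  ->  expected: {expected}")
```

Each generated scenario reads like a ready-to-run manual test. Together, the three scenarios traverse every edge of this small model.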

…to Automated On-The-Fly Test Execution

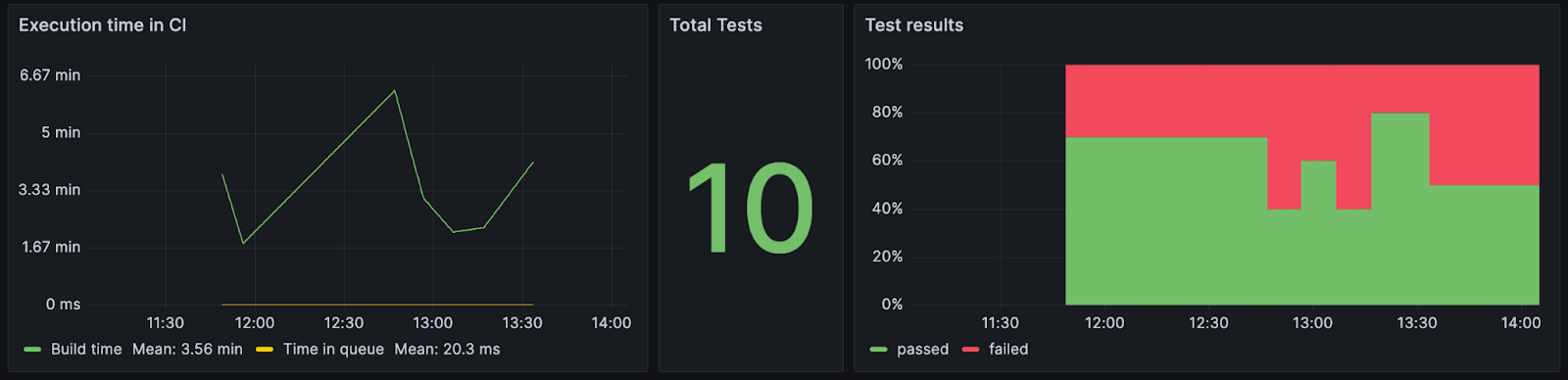

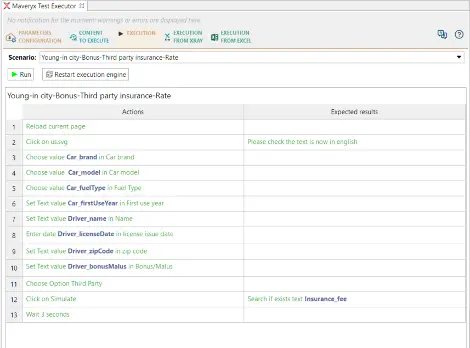

But can these scenarios also be automated and, if so, what results can we expect? This is where Maveryx comes into play. All you have to do is describe the manual steps in sufficient detail in Yest. Yest Augmented by Maveryx recognizes these instructions for manual execution (for example, “Click on Submit”) and executes them automatically.
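Here is a minimal Python sketch of how free-text steps such as “Click on Submit” might be mapped to executable actions. It assumes a small set of hypothetical phrasing rules based on regular expressions. This is not how Yest Augmented by Maveryx interprets steps internally. It only shows why detailed, consistently worded steps are easier to automate.

```python
import re
from typing import Dict, Optional, Tuple

# Hypothetical phrasing rules: (action name, pattern with named groups).
STEP_PATTERNS = [
    ("click", re.compile(r"^click(?: on)? (?:the )?(?P<target>.+?)(?: button)?$", re.IGNORECASE)),
    ("type", re.compile(
        r"^(?:enter|type) '(?P<value>[^']*)' in(?:to)? (?:the )?(?P<target>.+?)(?: field)?$",
        re.IGNORECASE)),
    ("check", re.compile(r"^check that (?:the )?(?P<target>.+?) is displayed$", re.IGNORECASE)),
]


def interpret(step: str) -> Optional[Tuple[str, Dict[str, str]]]:
    """Map a free-text manual step to (action, parameters), or None if no rule matches."""
    text = step.strip().rstrip(".")
    for action, pattern in STEP_PATTERNS:
        match = pattern.match(text)
        if match:
            return action, match.groupdict()
    return None


for step in ["Click on Submit", "Enter 'alice' into the User name field", "Smile at the camera"]:
    print(step, "->", interpret(step))
```

A step that matches no rule yields `None`. At that point, a tool would need to hand over to a human.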

If a step cannot be interpreted or fails, Yest Augmented by Maveryx stops and waits for manual input. You can then abort the test, or perform the necessary steps manually and continue. The execution results are reported in Yest Augmented by Maveryx or in the test management tool from which the test cases were read.
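The stop-and-hand-over behavior can be sketched as a simple execution loop. In this Python sketch, `execute` and `ask_tester` are hypothetical callables that stand in for the automation layer and the human tester, and `StepNotInterpretable` is an assumed exception type. For each step, the log records whether it ran automatically or manually, which is the kind of information that ends up in the reported results.

```python
from typing import Callable, List, Tuple


class StepNotInterpretable(Exception):
    """Raised by the (hypothetical) automation layer when it cannot map a step to an action."""


def run_scenario(
    steps: List[str],
    execute: Callable[[str], bool],     # automation: True if the step ran and its check passed
    ask_tester: Callable[[str], bool],  # human: True = step done manually, continue; False = abort
) -> Tuple[str, List[Tuple[str, str]]]:
    """Run steps automatically; on an uninterpretable or failed step, hand over to the tester."""
    log: List[Tuple[str, str]] = []
    for step in steps:
        try:
            ok = execute(step)
        except StepNotInterpretable:
            ok = False
        if ok:
            log.append((step, "automated"))
            continue
        # Stop and wait for manual input.
        if not ask_tester(step):
            log.append((step, "aborted"))
            return "aborted", log
        log.append((step, "manual"))
    return "completed", log


# Usage with a hypothetical stand-in for the automation layer.
def demo_execute(step: str) -> bool:
    if step.startswith("Click"):
        return True                       # automated successfully
    if step.startswith("Enter the code"):
        raise StepNotInterpretable(step)  # e.g. automation cannot read the email
    return False                          # automated, but the check failed


DEMO_STEPS = [
    "Click on Login",
    "Enter the code received by email",
    "Check that the Dashboard is displayed",
]
print(run_scenario(DEMO_STEPS, demo_execute, ask_tester=lambda step: True))
```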

Use Cases

Yest Augmented by Maveryx is useful in various situations:

- Hardening the test procedure: Manual testers can execute their tests without writing a single line of code. This gives them rapid feedback on the quality of their tests. No more tedious analyses and heated disputes about whose fault it was.

- Executing manual tests directly from Xray or MS Excel: During official test runs, manual testers can call on Yest Augmented by Maveryx to do part of the work. It is even possible to import tests from Xray or MS Excel and execute them without having used Yest Augmented by Maveryx for test design.
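For the second use case, test cases first have to be read from a spreadsheet. The Python sketch below assumes a hypothetical CSV export with the columns `Test Case`, `Action` and `Expected Result`, where a blank test case cell means the step belongs to the previous test case. It does not reproduce the actual Xray or Excel formats supported by the tool, and it uses only the standard `csv` module.

```python
import csv
import io
from typing import Dict, List, Optional, Tuple


def load_test_cases(csv_text: str) -> Dict[str, List[Tuple[str, str]]]:
    """Group (action, expected result) rows by test case name, preserving file order."""
    cases: Dict[str, List[Tuple[str, str]]] = {}
    current: Optional[str] = None
    for row in csv.DictReader(io.StringIO(csv_text)):
        # A blank "Test Case" cell continues the previous test case.
        name = row["Test Case"].strip() or current
        if name is None:
            raise ValueError("the first data row must name a test case")
        current = name
        cases.setdefault(name, []).append((row["Action"].strip(), row["Expected Result"].strip()))
    return cases


# Hypothetical export of a spreadsheet with manual test steps.
SAMPLE_CSV = """Test Case,Action,Expected Result
Valid login,Enter 'alice' in the User name field,
,Click on Submit,Code entry page is displayed
Wrong password,Enter 'wrong' in the Password field,
,Click on Submit,Error message is displayed
"""

for name, steps in load_test_cases(SAMPLE_CSV).items():
    print(name, steps)
```

Once loaded, each list of (action, expected result) pairs can be handed step by step to an execution loop that automates what it can interpret.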

As functional manual testers embark on their automation journey, they will gain valuable insights into creating robust, maintainable test suites that stand the test of time.

Conclusion

This blog post aims to guide manual functional testers through the complex process of developing functional tests and harnessing the transformative power of automation. In an age where software development demands agility and speed, understanding the intricacies of automated functional testing is critical for testers who want to optimize their workflows.

Clarity, modularity and maintainability are key factors for a successful transition from manual to automated test execution. Visual test design and model-based test case generation pave the way to structured tests and complete coverage of the modeled workflows. With the right tool support, these tests can be executed automatically without writing a single line of code. Yest Augmented by Maveryx provides this support. Contact us to learn more.

[1] Khankhoje, R. (2023). Revealing the Foundations: The Strategic Influence of Test Design in Automation. International Journal of Computer Science and Information Technology, 15. doi:10.5121/ijcsit.2023.15604

Authors

Alfonso Nocella, Co-founder and Sr. Software Engineer at Maveryx

Alfonso led the design and development of some core components of the Maveryx automated testing tool. He collaborated on several astrophysics IT research projects with the University of Napoli Federico II and the Italian national astrophysics research institute (INAF). Over the decades, Alfonso has worked on many industrial and research projects across different business fields and partnerships. He has also spoken at several conferences and universities.

Today, Alfonso supports critical QA projects for some Maveryx customers in the defense and public health sectors. He is also a test automation trainer and is responsible for Maveryx’s communication and technical marketing.

Anne Kramer, Trainer and Global CSM at Smartesting

Anne Kramer first came into contact with model-based test design in 2006. Since then, she has been passionate about the topic. Among other things, she co-authored the “ISTQB FL Model-Based Tester” syllabus and was lead author of the English-language textbook “Model-Based Testing Essentials” (Wiley, 2016).

After many years of working as a process consultant, project manager and trainer, Anne joined the French tool manufacturer Smartesting in April 2022. Today, she is fully dedicated to using models for testing purposes. This includes visual test design and, more recently, generative AI.

Maveryx is an exhibitor at EuroSTAR 2024. Join us in Stockholm!