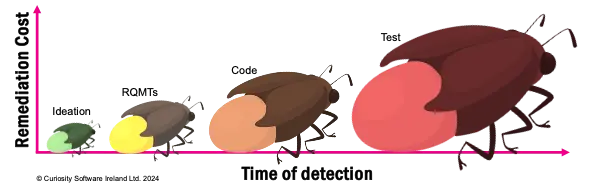

In today’s fast-paced world of software development, effective testing has never been more important. Conventional approaches to testing often struggle with rapid release cycles and complicated integrations, leading to slow delivery, high costs, and low software quality. Agentic testing is an innovative way to address these challenges. This approach to software testing equips testers with advanced AI capabilities, allowing them to automate a much broader range of testing work, including non-deterministic and non-linear tasks. AI agents take on the tedious and time-consuming tasks, providing a level of productivity that is not possible with conventional testing methods.

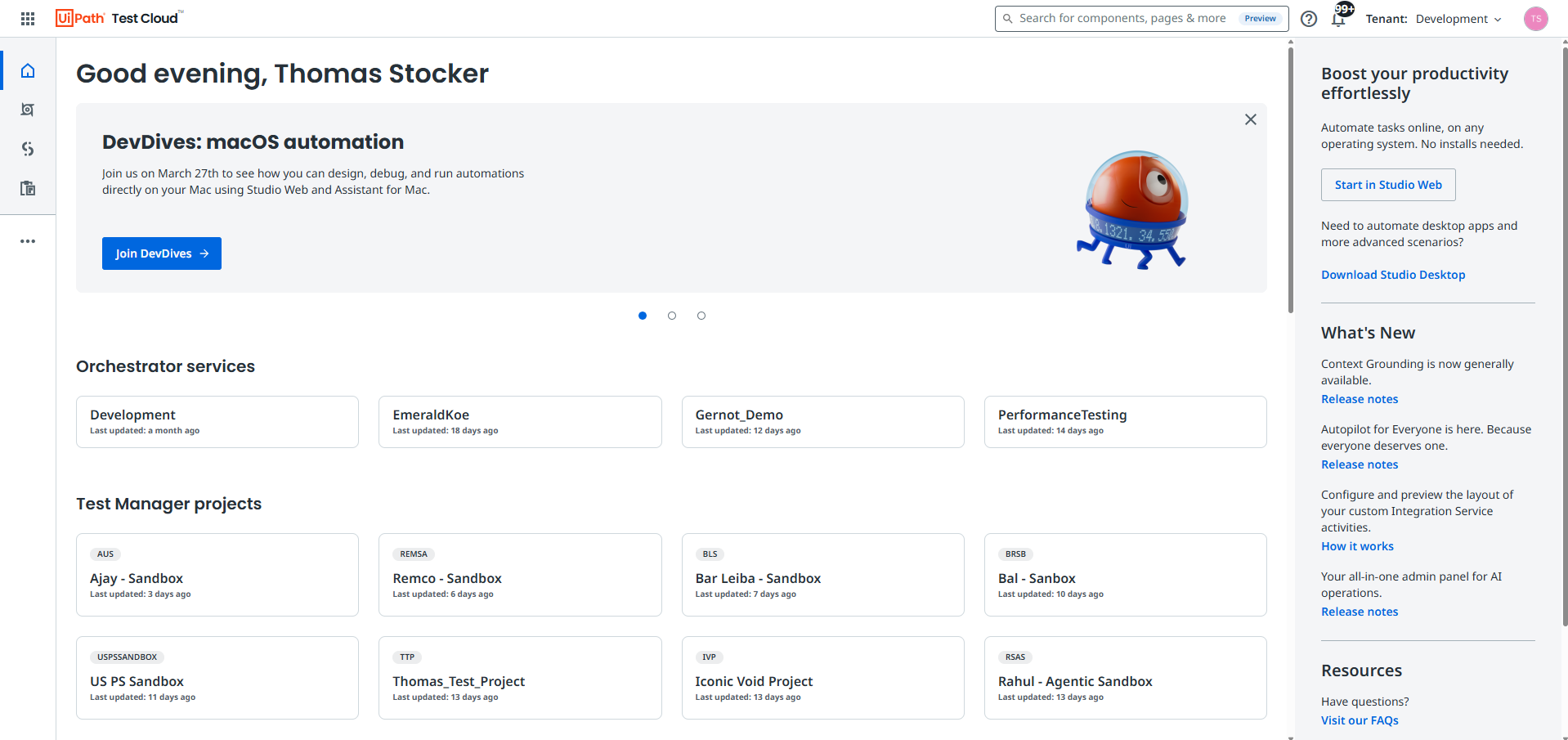

So how can you get started with agentic testing? We’re bringing agentic testing to life with the launch of UiPath Test Cloud, now generally available. UiPath Test Cloud—the next evolution of UiPath Test Suite—equips software testing teams with a fully featured testing solution that accelerates and streamlines testing for over 190 applications and technologies, including SAP®, Salesforce, ServiceNow, Workday, Oracle, and EPIC. Let’s take a closer look.

Comprehensive testing capabilities for the enterprise

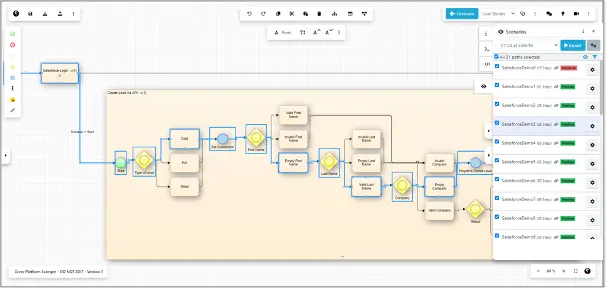

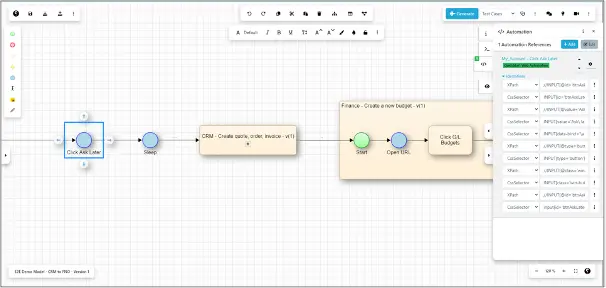

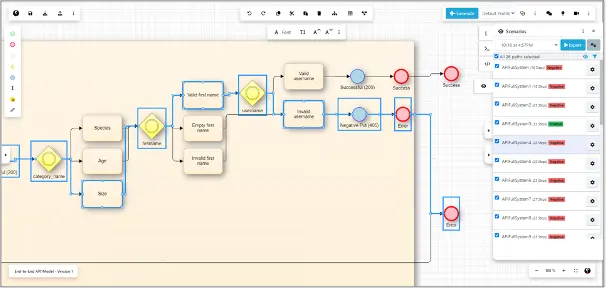

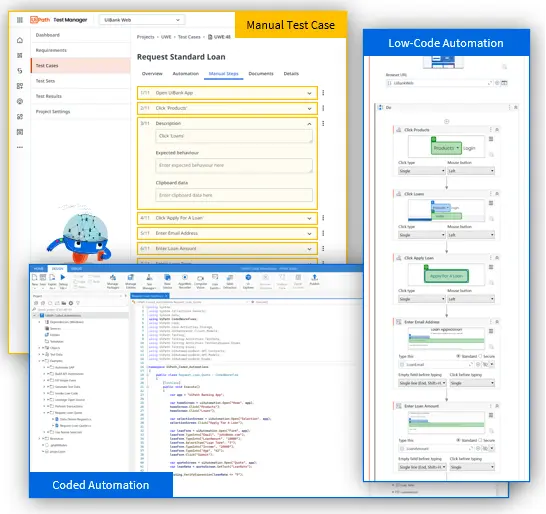

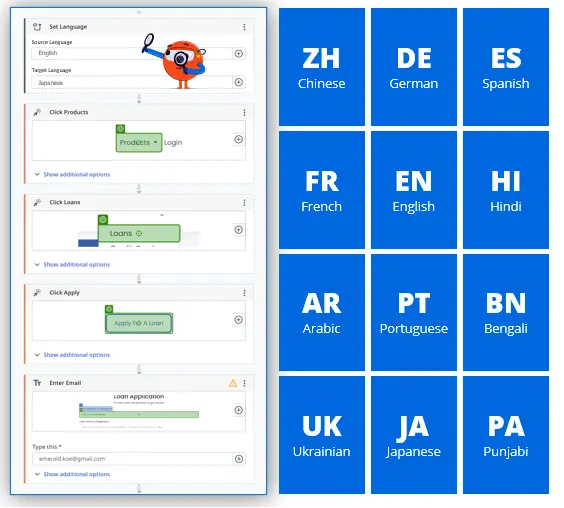

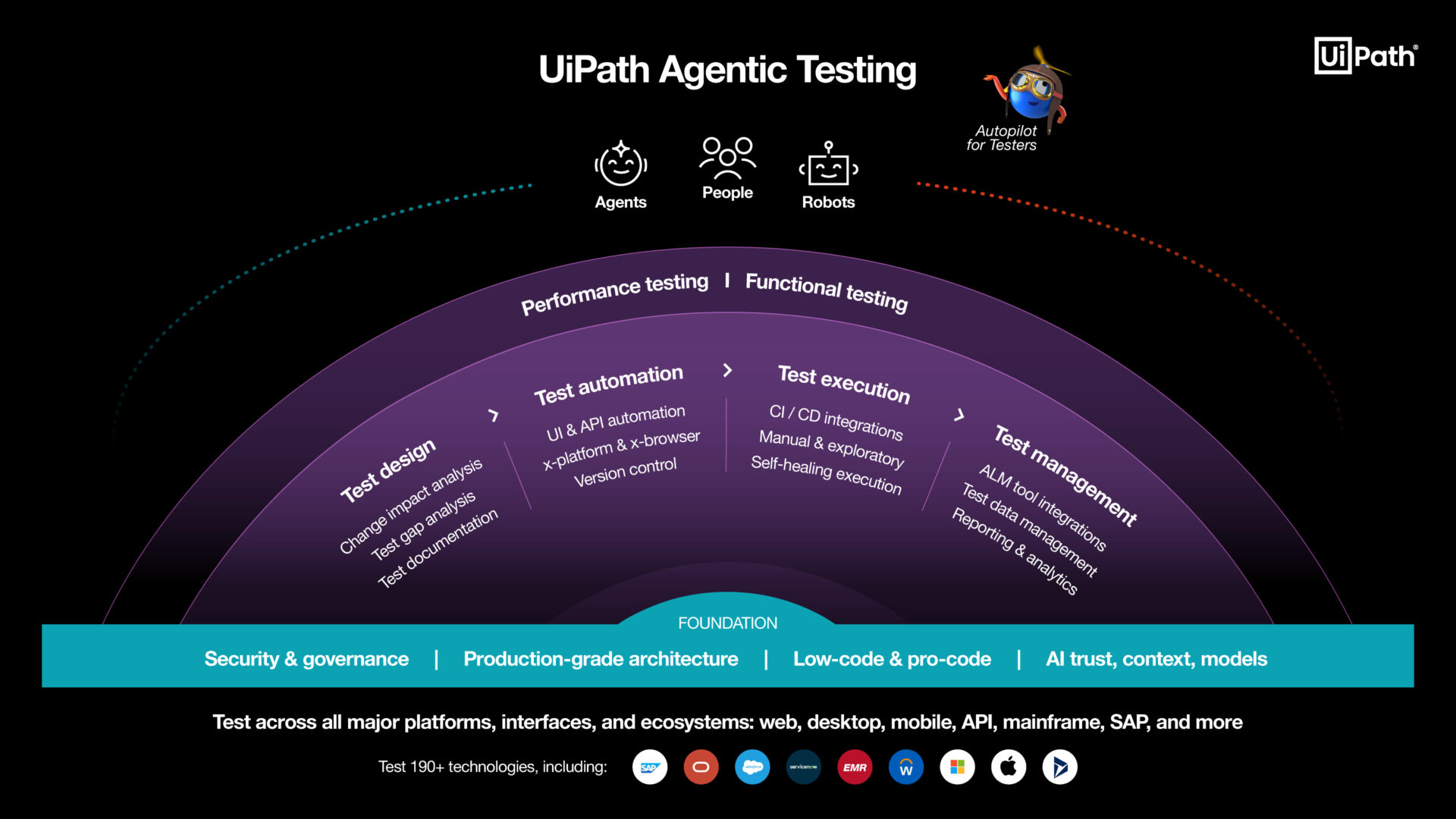

Test Cloud is an environment where software testers feel at home. It’s your solution for bringing agentic testing to life—augmenting you with AI agents across the entire testing lifecycle. Zooming in, Test Cloud is a fully featured platform designed to serve all your testing needs. Whether it’s functional or performance testing, Test Cloud empowers you with open, flexible, and responsible AI across every stage, from test design and test automation, to test execution and test management. And it’s built for scale—with everything you need to handle the largest and most complex testing projects. It helps you design smarter tests with capabilities like change impact analysis and test gap analysis, ensuring a risk-based, data-driven approach to testing. It gives you the flexibility to automate tests the way you want, whether it’s low-code or coded user interface (UI) and API automation, across platforms. Plus, with continuous integration and continuous delivery (CI/CD) integrations and distributed test execution, Test Cloud seamlessly fits into your ecosystem while accelerating your testing to keep up with rapid development cycles. And when it comes to test management, Test Cloud has you covered with 50+ application lifecycle management (ALM) integrations, as well as a rich set of test data management and reporting capabilities.

Unlock built-in and customizable AI for the entire testing lifecycle with UiPath Autopilot™ for Testers

What makes agentic testing truly agentic? AI agents. With UiPath Autopilot for Testers, our first-party AI agent available in Test Cloud, you’re equipped with built-in, customizable AI that accelerates every phase of the testing lifecycle.

Leverage Autopilot to enhance the test design phase through capabilities such as:

- Quality-checking requirements

- Generating tests for requirements

- Generating tests for SAP transactions

- Identifying tests requiring updates

- Detecting obsolete tests

Then, use Autopilot to take your test automation to the next level through capabilities such as:

- Generating low-code test automation

- Generating coded user interface (UI) and API automation

- Generating synthetic test data

- Performing fuzzy verifications

- Generating expressions

- Refactoring coded test automation

- Fixing validation errors in test automation

- Self-healing test automation

And enhance test management with Autopilot capabilities such as:

- Generating test insights reports

- Importing manual test cases

- Searching projects in natural language

Any type of tester, from a developer tester to a technical tester to a business tester, can use Autopilot to build resilient automations more quickly, unlock new use cases, and improve accuracy and time to value. Organizations are already seeing tangible benefits from this versatility and efficiency, as showcased by Cisco’s experience with Autopilot in accelerating its testing processes.

“At Cisco, our GenAI testing roadmap centers on leveraging UiPath LLM capabilities throughout the entire testing lifecycle, from creating user stories to generating test cases to reporting, while ensuring seamless integration with code repositories,” said Rajesh Gopinath, Senior Leader, Software Engineering at Cisco. “With the power of Autopilot, we’re equipped to eliminate manual testing by 50%, reduce the tools used in our testing framework, and reduce dependency on production data for testing.”

Build your own AI agents tailored specifically to your unique testing needs with Agent Builder

Now, let’s meet the toolkit for building AI agents tailored to your testing needs: UiPath Agent Builder. Leverage a prebuilt agent from the Agent Catalog, or build your own agent using the following components:

- Prompts: define natural language prompts with goals, roles, variables, and constraints

- Context: use active and long-term memory to inform the plan with context grounding

- Tools: define UI/API automations and/or other agents that are invoked based on a prompt

- Escalations: ask people for guidance with UiPath Action Center or UiPath Apps

- Evaluations: ensure the agent meets your desired objectives and behaves reliably in various scenarios

Looking for inspiration to jumpstart your first attempt at building an agent? Here are some recommendations for agents that you can build to help accelerate your testing:

- Data Retriever: helps find test data for exploratory testing sessions in databases

- Bug Consolidator: identifies distinct bugs behind failed test cases after nightly test runs

- Compliance Checker: finds test cases that do not adhere to best practices

- Stability Inspector: identifies flaky tests, repeatedly failed tests, and false positives

These are just a few agents that augment your expertise throughout the testing lifecycle. Join the Agent Builder waitlist to be the first in line to try your hand at building one.
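To make the Stability Inspector idea more concrete, here is a minimal sketch in plain Python of the kind of rule such an agent might start from. It is not Agent Builder code and does not use any UiPath API. The function name `classify_stability`, the `min_runs` threshold, and the four labels are all hypothetical, chosen only for illustration. The sketch assumes you already have a chronological list of pass/fail results per test, and it does not attempt to detect false positives, which would need more context than a pass/fail history provides.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def classify_stability(
    runs: Iterable[Tuple[str, bool]], min_runs: int = 3
) -> Dict[str, str]:
    """Label each test from its pass/fail history (hypothetical helper).

    runs: (test_name, passed) pairs, in chronological order.
    min_runs: assumed minimum number of runs needed before judging a test.
    """
    # Group results by test name, preserving run order.
    history: Dict[str, List[bool]] = defaultdict(list)
    for name, passed in runs:
        history[name].append(passed)

    labels: Dict[str, str] = {}
    for name, results in history.items():
        if len(results) < min_runs:
            labels[name] = "insufficient-data"
        elif all(results):
            labels[name] = "stable"
        elif not any(results):
            labels[name] = "failing"  # failed on every recorded run
        else:
            labels[name] = "flaky"  # mix of passes and failures
    return labels


# Example with made-up nightly results.
nightly_runs = [
    ("login", True), ("login", False), ("login", True),
    ("checkout", False), ("checkout", False), ("checkout", False),
    ("search", True), ("search", True), ("search", True),
    ("export", True),
]
labels = classify_stability(nightly_runs)
```

With this sample data, `login` is labeled flaky, `checkout` failing, `search` stable, and `export` lacks enough runs to judge. An agent would layer reasoning on top of a rule like this, for example by reading failure logs to explain why a test is flaky.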

Open, flexible, and responsible

Beyond AI agents, what does Test Cloud offer that helps you engage in agentic testing?

With UiPath Test Cloud, you can harness the power of an open and flexible architecture that seamlessly integrates with your existing tools, including connections with your CI/CD pipelines, ALM tools, and version control systems, as well as webhooks that keep you informed in real time. This flexibility ensures that Test Cloud adapts to your unique enterprise needs.

When it comes to responsible AI, you benefit from the UiPath AI Trust Layer, which provides you with explainable AI, bias detection, and robust privacy protection. You can confidently meet regulatory requirements and internal governance standards thanks to comprehensive auditability features. By embracing the open architecture and responsible AI capabilities of Test Cloud, you’re not just streamlining your testing process; you’re future-proofing your software quality with intelligent, efficient, and trustworthy technology that grows with your team’s needs.

Resilient end-to-end automation

With UiPath Test Cloud, you can unlock the power of resilient end-to-end automation that will enhance your testing processes. Experience seamless automation capabilities for any UI or API, giving you unparalleled flexibility in your testing approach. Whether you’re working with home-grown web and mobile applications or complex enterprise systems like SAP, Oracle, Workday, Salesforce, and ServiceNow, you can engage in automated testing that covers all aspects of your software ecosystem. By leveraging powerful end-to-end automation, you’ll not only improve the efficiency of your testing processes but also gain greater confidence in the quality and reliability of your software releases. Customers like Dart Container, QuidelOrtho, Orange Spain, and Cushman & Wakefield have achieved 90% automation rates, 30-40% cost reduction, 6X faster release speeds, and other significant benefits by using UiPath automated testing capabilities.

Production-grade architecture and governance

You and your team may face the challenge of maintaining a secure, scalable, and compliant testing infrastructure that can keep up with your agile development processes. With Test Cloud, you’re equipped with a production-grade architecture and robust governance features that will transform your agentic testing experience.

Benefit from Veracode certification, ensuring your testing environment meets the highest security standards and giving you peace of mind. Comprehensive auditing capabilities provide you with detailed insights into all testing activities, enabling you to maintain full transparency and easily demonstrate compliance. You also have granular role management features, allowing you to precisely control access and permissions, ensuring that the right people have the right level of access at all times. With centralized credential management, you can streamline security processes and reduce the risk of unauthorized access, making it easier than ever to manage and protect sensitive testing data.

Powered by the UiPath Platform

When you choose UiPath Test Cloud, you’re not just getting a standalone testing solution; you’re tapping into the power of the entire UiPath Platform™. This opens up a world of possibilities for streamlining your testing processes and boosting your overall automation efforts. You’ll benefit from shared and reusable components across teams, allowing you to leverage expertise and reduce duplication of effort. EDF Renewables, for example, achieved 75% component reuse by leveraging testing capabilities within the UiPath Platform. With access to the UiPath Marketplace, you’ll have a wealth of prebuilt solutions at your fingertips, accelerating your testing initiatives. Access to snippets and libraries empowers you to create modular, reusable code that can be easily shared and maintained across your organization. Plus, you can leverage centralized object repositories, which simplify test maintenance and improve consistency across your automation projects. Additionally, the robust asset management capabilities ensure that you can efficiently organize, version, and deploy your automation assets enterprise-wide, maximizing the value of your organization’s investment in the UiPath Platform™.

The benefits of agentic testing with UiPath Test Cloud

No matter your role or seniority at your organization, you can start reaping the benefits of Test Cloud for agentic testing right away. As a CIO, you’ll experience increased efficiency, reduced costs, and better resource utilization, ultimately leading to faster time-to-market and enterprise-wide automation. Testing team leads will benefit from improved consistency and reliability, increased productivity, and better defect detection, while standardizing testing processes and achieving unprecedented scalability. For testers, Test Cloud offers increased accuracy and efficiency, enhanced test coverage, and faster feedback loops, resulting in higher job satisfaction. The tangible benefits are clear: based on an in-depth study conducted by IDC, customers using UiPath for testing have achieved average annual savings of $4M per customer, a 529% three-year return on investment, and a six-month payback period.

With agentic testing powered by Test Cloud, all roles will enjoy accelerated test cycles, deeper test coverage, and reduced risk, all while realizing significant cost savings and resource optimization. This comprehensive and adaptive testing approach will empower your organization to deliver high-quality software faster than ever before, accelerating your time to value and giving you a competitive edge in today’s fast-paced software landscape. This vision of AI-augmented testing is not just theoretical; forward-thinking organizations like State Street are already anticipating how it will transform their testing processes.

The future of agentic testing

Test Cloud isn’t just built for the testing you know today—it’s built for where testing is going. With Test Cloud, you’re not just keeping up with increasing testing demands—you’re staying ahead. Get started with UiPath Test Cloud by signing up for the trial today.

Author

Sophie Gustafson

Product Marketing Manager, Test Cloud, UiPath