Traditional quality assurance is failing. Despite companies investing millions in testing infrastructure and achieving impressive unit test coverage – often exceeding 90% – critical production issues persist. Why? We’ve been solving the wrong problem.

The Evolution of a Crisis

Ten years ago, unit testing seemed like the silver bullet. Companies built extensive test suites, hired specialized QA teams, and celebrated high coverage metrics. With tools like GitHub Copilot, achieving near-100% unit test coverage is easier than ever. Yet paradoxically, as test coverage increased, so did production incidents.

The Real-World Testing Gap

Here’s what we discovered at SaolaAI after analyzing over 100 companies’ testing practices:

- Unit tests create a false sense of security. Teams mock dependencies and test isolated functions, but real-world failures occur at system boundaries.

- Microservice architectures exponentially increase complexity. A single user action might traverse 20+ services, creating millions of potential failure combinations.

- The “No QA” movement, while promoting developer ownership, has inadvertently reduced comprehensive testing.
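The first two points can be made concrete with a short sketch. Everything named here (`order_total`, `DeployedPricingClient`, `combined_state_count`) is hypothetical and not taken from any real codebase. The first part assumes a pricing service that returns prices as strings while the developer’s mock returns integers. The second part assumes, as a deliberate simplification, that each service on a request path is either healthy or failing.

```python
from unittest.mock import Mock


def order_total(pricing_client, sku, quantity):
    """Code under test: unit price from a pricing service times quantity."""
    return pricing_client.get_price(sku) * quantity


class DeployedPricingClient:
    """Hypothetical stand-in for the real service, which returns prices as strings."""

    def get_price(self, sku):
        return "10"


# Unit test with a mock: passes, because the mock encodes the developer's
# assumption that prices arrive as integers.
mock_client = Mock()
mock_client.get_price.return_value = 10
mocked_result = order_total(mock_client, "sku-1", 2)
assert mocked_result == 20

# Same code at the real boundary: no exception, just a silently wrong value,
# because "10" * 2 is string repetition in Python.
real_result = order_total(DeployedPricingClient(), "sku-1", 2)
print(repr(real_result))  # '1010'


def combined_state_count(num_services, states_per_service=2):
    """Distinct combined states along one path (assumed: independent services)."""
    return states_per_service ** num_services


# 20 services, each healthy or failing: 2**20 combinations.
print(combined_state_count(20))  # 1048576
```

The mocked test stays green while the boundary behaviour is wrong, and even the two-state simplification already yields about a million combinations for a 20-service path.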

The E2E Testing Paradox

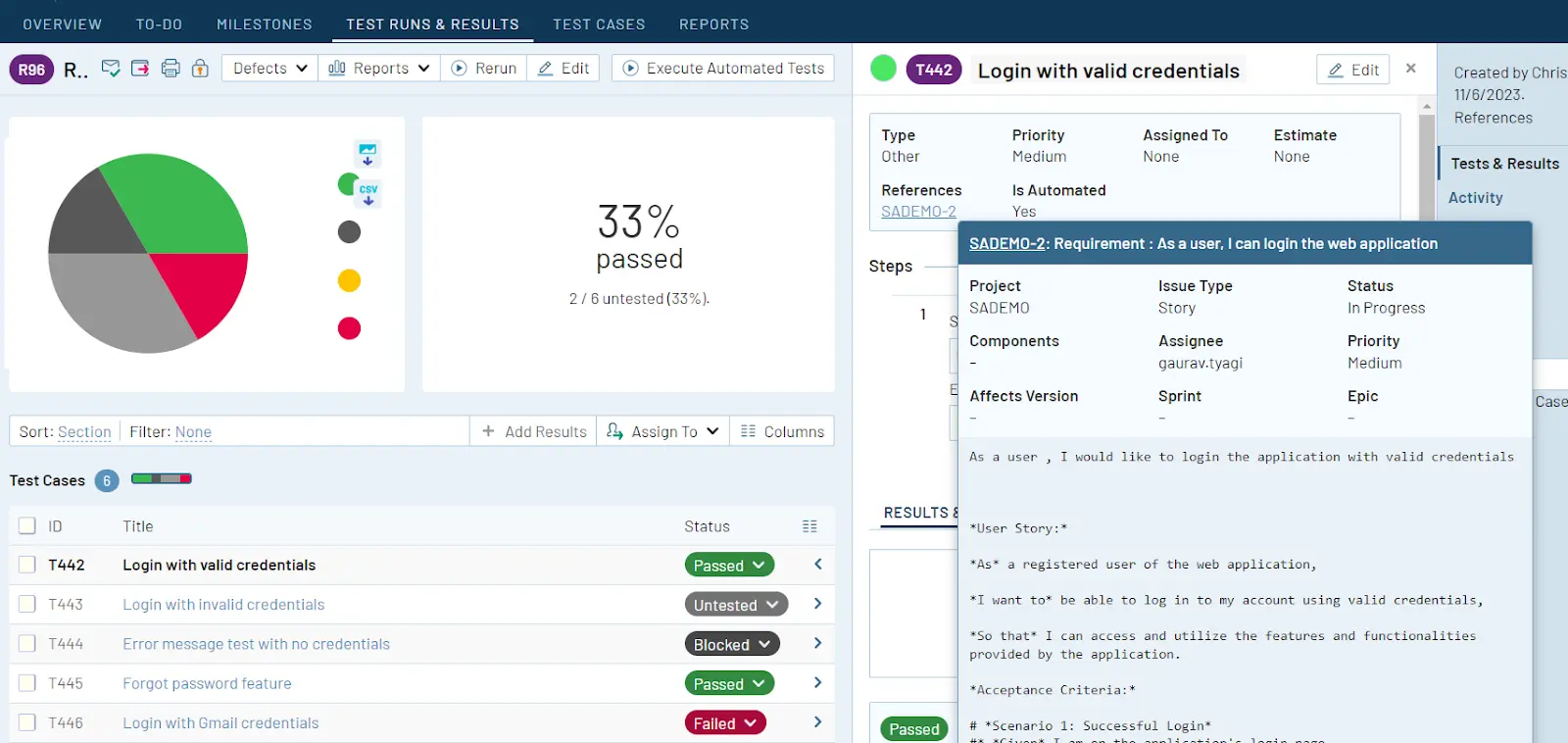

End-to-end testing is essential for verifying that complex systems function seamlessly, yet companies struggle with major obstacles. Setting up E2E environments can take months, while maintaining test data often turns into a full-time job. Integrating these tests into CI/CD pipelines requires specialized expertise, adding another layer of complexity.

On the technical side, flakiness remains a persistent issue, with failure rates reaching 30-40%. Browser updates frequently break test suites, while asynchronous operations and timing inconsistencies introduce further instability. These challenges make E2E testing notoriously difficult to manage.
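Timing-related flakiness often comes from tests that sleep for a fixed interval and hope an asynchronous operation has finished. One common mitigation, sketched here as an illustration, is to poll for a condition with a deadline instead. The helper name `wait_until` and its default values are assumptions made for this example.

```python
import threading
import time


def wait_until(condition, timeout=5.0, interval=0.05):
    """Poll `condition` until it returns True or `timeout` seconds elapse."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    # One last check so a condition that became true at the deadline still counts.
    return bool(condition())


# Simulated asynchronous operation that completes after roughly 0.2 seconds.
state = {"ready": False}
threading.Timer(0.2, lambda: state.update(ready=True)).start()

# The test proceeds as soon as the operation finishes instead of guessing a delay.
became_ready = wait_until(lambda: state["ready"], timeout=2.0)
print(became_ready)
```

A fixed `time.sleep(0.1)` in place of the polling call would fail this scenario, while a fixed `time.sleep(2)` would pass but slow every run.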

Beyond the technical barriers, cultural resistance slows adoption. Developers often see E2E testing as solely QA’s responsibility, while product teams prioritize feature development over test reliability. When test suites fail, they are frequently ignored or abandoned rather than fixed, leading to gaps in test coverage and overall software quality.

The AI-Driven Future

Fortunately, modern solutions are emerging that leverage AI to revolutionize testing: automated test generation based on user behavior, self-healing tests that adapt to UI changes, and intelligent test selection that reduces runtime. The future of AI-driven testing looks promising.

The Way Forward

Quality isn’t just about test coverage – it’s about understanding how systems behave in production:

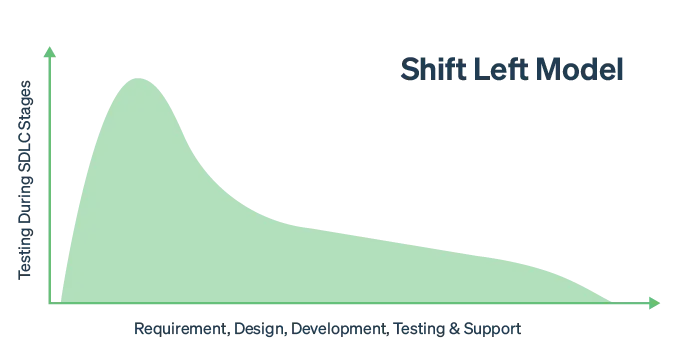

- Shift from code coverage to interaction coverage

- Integrate observability with testing

- Use ML to predict failure scenarios

- Automate maintenance of test suites
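The article does not define “interaction coverage” precisely. As one possible reading, the sketch below treats it as the share of service-to-service calls observed in production that at least one test exercises. The service names and the `interaction_coverage` function are hypothetical, and in practice the observed set would come from tracing or other observability data.

```python
from typing import Set, Tuple

Call = Tuple[str, str]  # (caller, callee)


def interaction_coverage(observed: Set[Call], tested: Set[Call]) -> float:
    """Fraction of production-observed calls exercised by at least one test."""
    if not observed:
        return 1.0  # nothing observed, so nothing is left uncovered
    return len(observed & tested) / len(observed)


# Assumed sample data: calls seen in production traces vs. calls hit by tests.
production_calls = {
    ("web", "auth"),
    ("web", "cart"),
    ("cart", "pricing"),
    ("cart", "inventory"),
}
tested_calls = {("web", "auth"), ("cart", "pricing")}

coverage = interaction_coverage(production_calls, tested_calls)
untested = sorted(production_calls - tested_calls)

print(coverage)  # 0.5
print(untested)  # [('cart', 'inventory'), ('web', 'cart')]
```

Unlike line-level code coverage, this metric points directly at the boundaries that have never been tested together.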

For too long, we’ve treated quality as a coding problem. It’s time to recognize it as a data problem. By combining AI, machine learning, and traditional testing approaches, we can finally bridge the gap between unit test success and production reliability.

The next evolution in software quality isn’t about writing more tests – it’s about making testing intelligent enough to match the complexity of modern applications.

This is the challenge that inspired SaolaAI: making quality as sophisticated as the systems we’re building. The question isn’t whether AI will transform testing, but how quickly companies will adapt to this new paradigm.

Author

Arkady Fukzon

CEO and Co-Founder, SaolaAI

Saola is exhibiting at this year’s EuroSTAR Conference EXPO. Join us in Edinburgh, 3-6 June 2025.